Merge remote-tracking branch 'origin/master'

.github/FUNDING.yml (new file, 3 lines changed)
@@ -0,0 +1,3 @@
+ # These are supported funding model platforms
+
+ github: [jendib]

.gitignore (6 lines changed)
@@ -11,6 +11,6 @@
  *.iml
  node_modules
  import_test
- docs-importer-linux
- docs-importer-macos
- docs-importer-win.exe
+ teedy-importer-linux
+ teedy-importer-macos
+ teedy-importer-win.exe

.travis.yml (28 lines changed)
@@ -4,17 +4,27 @@ language: java
  before_install:
  - sudo add-apt-repository -y ppa:mc3man/trusty-media
  - sudo apt-get -qq update
- - sudo apt-get -y -q install ffmpeg mediainfo tesseract-ocr tesseract-ocr-fra tesseract-ocr-ita tesseract-ocr-kor tesseract-ocr-rus tesseract-ocr-ukr tesseract-ocr-spa tesseract-ocr-ara tesseract-ocr-hin tesseract-ocr-deu tesseract-ocr-pol tesseract-ocr-jpn tesseract-ocr-por tesseract-ocr-tha tesseract-ocr-jpn tesseract-ocr-chi-sim tesseract-ocr-chi-tra
+ - sudo apt-get -y -q install ffmpeg mediainfo tesseract-ocr tesseract-ocr-fra tesseract-ocr-ita tesseract-ocr-kor tesseract-ocr-rus tesseract-ocr-ukr tesseract-ocr-spa tesseract-ocr-ara tesseract-ocr-hin tesseract-ocr-deu tesseract-ocr-pol tesseract-ocr-jpn tesseract-ocr-por tesseract-ocr-tha tesseract-ocr-jpn tesseract-ocr-chi-sim tesseract-ocr-chi-tra tesseract-ocr-nld tesseract-ocr-tur tesseract-ocr-heb tesseract-ocr-hun tesseract-ocr-fin tesseract-ocr-swe tesseract-ocr-lav tesseract-ocr-dan
  - sudo apt-get -y -q install haveged && sudo service haveged start
  after_success:
- - mvn -Pprod -DskipTests clean install
- - docker login -u $DOCKER_USER -p $DOCKER_PASS
- - export REPO=sismics/docs
- - export TAG=`if [ "$TRAVIS_BRANCH" == "master" ]; then echo "latest"; else echo $TRAVIS_BRANCH ; fi`
- - docker build -f Dockerfile -t $REPO:$COMMIT .
- - docker tag $REPO:$COMMIT $REPO:$TAG
- - docker tag $REPO:$COMMIT $REPO:travis-$TRAVIS_BUILD_NUMBER
- - docker push $REPO
+ - |
+ if [ "$TRAVIS_PULL_REQUEST" == "false" ]; then
+ mvn -Pprod -DskipTests clean install
+ docker login -u $DOCKER_USER -p $DOCKER_PASS
+ export REPO=sismics/docs
+ export TAG=`if [ "$TRAVIS_BRANCH" == "master" ]; then echo "latest"; else echo $TRAVIS_BRANCH ; fi`
+ docker build -f Dockerfile -t $REPO:$COMMIT .
+ docker tag $REPO:$COMMIT $REPO:$TAG
+ docker tag $REPO:$COMMIT $REPO:travis-$TRAVIS_BUILD_NUMBER
+ docker push $REPO
+ cd docs-importer
+ export REPO=sismics/docs-importer
+ export TAG=`if [ "$TRAVIS_BRANCH" == "master" ]; then echo "latest"; else echo $TRAVIS_BRANCH ; fi`
+ docker build -f Dockerfile -t $REPO:$COMMIT .
+ docker tag $REPO:$COMMIT $REPO:$TAG
+ docker tag $REPO:$COMMIT $REPO:travis-$TRAVIS_BUILD_NUMBER
+ docker push $REPO
+ fi
  env:
  global:
  - secure: LRGpjWORb0qy6VuypZjTAfA8uRHlFUMTwb77cenS9PPRBxuSnctC531asS9Xg3DqC5nsRxBBprgfCKotn5S8nBSD1ceHh84NASyzLSBft3xSMbg7f/2i7MQ+pGVwLncusBU6E/drnMFwZBleo+9M8Tf96axY5zuUp90MUTpSgt0=
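The tag selection used in the `.travis.yml` script can be tried locally without Travis or Docker. This is a minimal sketch, not code from the repository: `image_tag` and `print_tags` are hypothetical helper names, and the build number passed at the end is a placeholder. It mirrors the backtick expression in the script, where `master` is published as `latest` and any other branch name is used as the tag unchanged.

```shell
#!/bin/sh
# Hypothetical helper (not in the repository): mirrors the TAG expression
# from .travis.yml. "master" maps to "latest"; every other branch name is
# returned as-is.
image_tag() {
    branch="$1"
    if [ "$branch" = "master" ]; then
        echo "latest"
    else
        echo "$branch"
    fi
}

# Hypothetical helper: lists the branch tag and the per-build tag that the
# script applies to an image, given a repository, branch and build number.
print_tags() {
    repo="$1"
    branch_name="$2"
    build_number="$3"
    echo "$repo:$(image_tag "$branch_name")"
    echo "$repo:travis-$build_number"
}

# Placeholder values for illustration.
print_tags sismics/docs master 42
```

The same helper applies to the second image, since the script repeats the expression after `cd docs-importer` with `REPO=sismics/docs-importer`.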

@@ -1,7 +1,7 @@
  FROM sismics/ubuntu-jetty:9.4.12
  MAINTAINER b.gamard@sismics.com

- RUN apt-get update && apt-get -y -q install ffmpeg mediainfo tesseract-ocr tesseract-ocr-fra tesseract-ocr-ita tesseract-ocr-kor tesseract-ocr-rus tesseract-ocr-ukr tesseract-ocr-spa tesseract-ocr-ara tesseract-ocr-hin tesseract-ocr-deu tesseract-ocr-pol tesseract-ocr-jpn tesseract-ocr-por tesseract-ocr-tha tesseract-ocr-jpn tesseract-ocr-chi-sim tesseract-ocr-chi-tra && \
+ RUN apt-get update && apt-get -y -q install ffmpeg mediainfo tesseract-ocr tesseract-ocr-fra tesseract-ocr-ita tesseract-ocr-kor tesseract-ocr-rus tesseract-ocr-ukr tesseract-ocr-spa tesseract-ocr-ara tesseract-ocr-hin tesseract-ocr-deu tesseract-ocr-pol tesseract-ocr-jpn tesseract-ocr-por tesseract-ocr-tha tesseract-ocr-jpn tesseract-ocr-chi-sim tesseract-ocr-chi-tra tesseract-ocr-nld tesseract-ocr-tur tesseract-ocr-heb tesseract-ocr-hun tesseract-ocr-fin tesseract-ocr-swe tesseract-ocr-lav tesseract-ocr-dan && \
  apt-get clean && rm -rf /var/lib/apt/lists/*

  # Remove the embedded javax.mail jar from Jetty
@@ -9,3 +9,5 @@ RUN rm -f /opt/jetty/lib/mail/javax.mail.glassfish-*.jar

  ADD docs.xml /opt/jetty/webapps/docs.xml
  ADD docs-web/target/docs-web-*.war /opt/jetty/webapps/docs.war
+
+ ENV JAVA_OPTIONS -Xmx1g

56

README.md

@ -1,27 +1,24 @@

|

||||

<h3 align="center">

|

||||

<img src="https://www.sismicsdocs.com/img/github-title.png" alt="Sismics Docs" width=500 />

|

||||

<img src="https://teedy.io/img/github-title.png" alt="Teedy" width=500 />

|

||||

</h3>

|

||||

|

||||

[](https://twitter.com/sismicsdocs)

|

||||

[](https://www.gnu.org/licenses/old-licenses/gpl-2.0.en.html)

|

||||

[](http://travis-ci.org/sismics/docs)

|

||||

|

||||

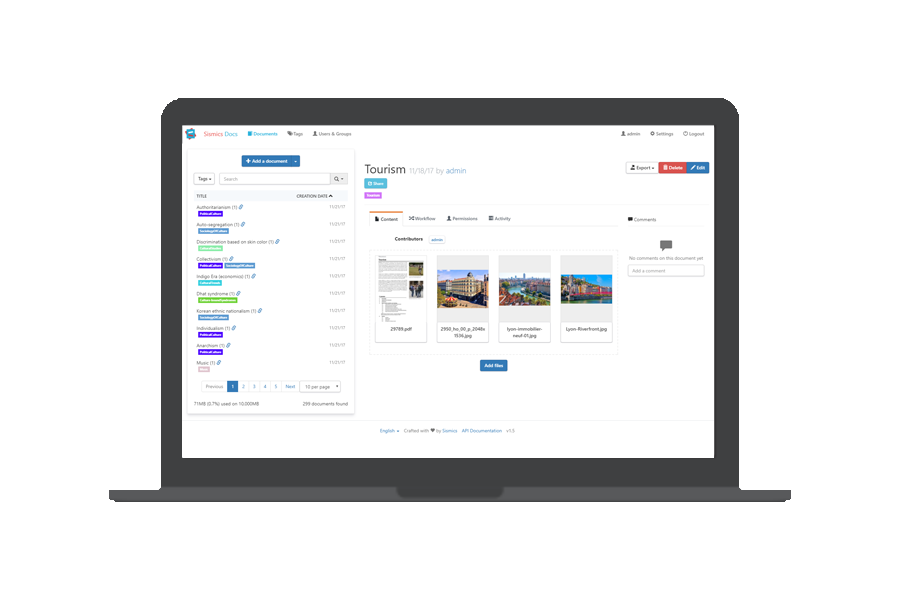

Docs is an open source, lightweight document management system for individuals and businesses.

|

||||

|

||||

**Discuss it on [Product Hunt](https://www.producthunt.com/posts/sismics-docs) 🦄**

|

||||

Teedy is an open source, lightweight document management system for individuals and businesses.

|

||||

|

||||

<hr />

|

||||

<h2 align="center">

|

||||

✨ We just launched a Cloud version of Sismics Docs! Head to <a href="https://www.sismicsdocs.com/">sismicsdocs.com</a> for more informations ✨

|

||||

✨ <a href="https://github.com/users/jendib/sponsorship">Sponsor this project if you use and appreciate it!</a> ✨

|

||||

</h2>

|

||||

<hr />

|

||||

|

||||

|

||||

|

||||

|

||||

Demo

|

||||

----

|

||||

|

||||

A demo is available at [demo.sismicsdocs.com](https://demo.sismicsdocs.com)

|

||||

A demo is available at [demo.teedy.io](https://demo.teedy.io)

|

||||

- Guest login is enabled with read access on all documents

|

||||

- "admin" login with "admin" password

|

||||

- "demo" login with "password" password

@@ -31,16 +28,19 @@ Features

  - Responsive user interface
  - Optical character recognition
+ - LDAP authentication
  - Support image, PDF, ODT, DOCX, PPTX files
- - Video file support
+ - Video file support
  - Flexible search engine with suggestions and highlighting
  - Full text search in all supported files
  - All [Dublin Core](http://dublincore.org/) metadata
+ - Custom user-defined metadata
  - Workflow system
  - 256-bit AES encryption of stored files
+ - File versioning
  - Tag system with nesting
- - Import document from email (EML format)
- - Automatic inbox scanning and importing
+ - Import document from email (EML format)
+ - Automatic inbox scanning and importing
  - User/group permission system
  - 2-factor authentication
  - Hierarchical groups

@@ -49,22 +49,23 @@ Features
  - Storage quota per user
  - Document sharing by URL
  - RESTful Web API
- - Webhooks to trigger external service
+ - Webhooks to trigger external service
  - Fully featured Android client
- - [Bulk files importer](https://github.com/sismics/docs/tree/master/docs-importer) (single or scan mode)
+ - [Bulk files importer](https://github.com/sismics/docs/tree/master/docs-importer) (single or scan mode)
  - Tested to one million documents

  Install with Docker
  -------------------

- From a Docker host, run this command to download and install Sismics Docs. The server will run on <http://[your-docker-host-ip]:8100>.
+ A preconfigured Docker image is available, including OCR and media conversion tools, listening on port 8080. The database is an embedded H2 database but PostgreSQL is also supported for more performance.
+
  **The default admin password is "admin". Don't forget to change it before going to production.**
+ - Master branch, can be unstable. Not recommended for production use: `sismics/docs:latest`
+ - Latest stable version: `sismics/docs:v1.8`

- docker run --rm --name sismics_docs_latest -d -e DOCS_BASE_URL='http://[your-docker-host-ip]:8100' -p 8100:8080 -v sismics_docs_latest:/data sismics/docs:latest
- <img src="http://www.newdesignfile.com/postpic/2011/01/green-info-icon_206509.png" width="16px" height="16px"> **Note:** You will need to change [your-docker-host-ip] with the IP address or FQDN of your docker host e.g.
+ The data directory is `/data`. Don't forget to mount a volume on it.

- FQDN: http://docs.sismics.com
- IP: http://192.168.100.10
+ To build external URL, the server is expecting a `DOCS_BASE_URL` environment variable (for example https://teedy.mycompany.com)

  Manual installation
  -------------------

@@ -80,12 +81,12 @@ Manual installation
  The latest release is downloadable here: <https://github.com/sismics/docs/releases> in WAR format.
  **The default admin password is "admin". Don't forget to change it before going to production.**

- How to build Docs from the sources
+ How to build Teedy from the sources
  ----------------------------------

- Prerequisites: JDK 8 with JCE, Maven 3, Tesseract 3 or 4
+ Prerequisites: JDK 8 with JCE, Maven 3, NPM, Grunt, Tesseract 3 or 4

- Docs is organized in several Maven modules:
+ Teedy is organized in several Maven modules:

  - docs-core
  - docs-web

@@ -121,19 +122,8 @@ All contributions are more than welcomed. Contributions may close an issue, fix

  The `master` branch is the default and base branch for the project. It is used for development and all Pull Requests should go there.

-
- Community
- ---------
-
- Get updates on Sismics Docs' development and chat with the project maintainers:
-
- - Follow [@sismicsdocs on Twitter](https://twitter.com/sismicsdocs)
- - Read and subscribe to [The Official Sismics Docs Blog](https://blog.sismicsdocs.com/)
- - Check the [Official Website](https://www.sismicsdocs.com)
- - Join us [on Facebook](https://www.facebook.com/sismicsdocs)
-
  License
  -------

- Docs is released under the terms of the GPL license. See `COPYING` for more
+ Teedy is released under the terms of the GPL license. See `COPYING` for more
  information or see <http://opensource.org/licenses/GPL-2.0>.
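The Docker notes added to README.md state three things: the image listens on port 8080, the data directory is `/data`, and the server reads `DOCS_BASE_URL` to build external URLs. A minimal sketch of a matching `docker run` invocation follows. The host port, the volume name `teedy_data`, and the helper name `teedy_run_cmd` are assumptions made for illustration; the base URL is the README's own example value and the image tag is the stable tag it lists. The helper only prints the command so it can be reviewed before anything is started.

```shell
#!/bin/sh
# Assumed values, for illustration only.
HOST_PORT=8080                          # host port mapped to the container's 8080
VOLUME=teedy_data                       # hypothetical named volume for /data
BASE_URL="https://teedy.mycompany.com"  # example value from the README

# Hypothetical helper: prints the docker command instead of running it.
teedy_run_cmd() {
    echo docker run -d \
        -e "DOCS_BASE_URL=$BASE_URL" \
        -p "$HOST_PORT:8080" \
        -v "$VOLUME:/data" \
        sismics/docs:v1.8
}

teedy_run_cmd
# To start the container for real: eval "$(teedy_run_cmd)"
```

As the README warns, the default admin password is "admin" and should be changed before the instance is exposed.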

@@ -4,7 +4,7 @@ buildscript {
  google()
  }
  dependencies {
- classpath 'com.android.tools.build:gradle:3.2.1'
+ classpath 'com.android.tools.build:gradle:3.4.0'
  }
  }
  apply plugin: 'com.android.application'

@@ -8,13 +8,14 @@
  <uses-permission android:name="android.permission.ACCESS_WIFI_STATE" />
  <uses-permission android:name="android.permission.WRITE_EXTERNAL_STORAGE" />
  <uses-permission android:name="android.permission.WAKE_LOCK" />
+ <uses-permission android:name="android.permission.FOREGROUND_SERVICE" />

  <application
  android:name=".MainApplication"
  android:allowBackup="true"
  android:icon="@mipmap/ic_launcher"
  android:label="@string/app_name"
- android:theme="@style/AppTheme" >
+ android:theme="@style/AppTheme">
  <activity
  android:name=".activity.LoginActivity"
  android:label="@string/app_name"

@@ -1,6 +1,7 @@
  package com.sismics.docs;

  import android.app.Application;
+ import android.support.v7.app.AppCompatDelegate;

  import com.sismics.docs.model.application.ApplicationContext;
  import com.sismics.docs.util.PreferenceUtil;
@@ -22,5 +23,7 @@ public class MainApplication extends Application {
  // TODO Provide documents to intent action get content

  super.onCreate();
+
+ AppCompatDelegate.setDefaultNightMode(AppCompatDelegate.MODE_NIGHT_NO);
  }
  }

@@ -52,7 +52,7 @@ public class AuditLogActivity extends AppCompatActivity {
  }

  // Configure the swipe refresh layout
- SwipeRefreshLayout swipeRefreshLayout = (SwipeRefreshLayout) findViewById(R.id.swipeRefreshLayout);
+ SwipeRefreshLayout swipeRefreshLayout = findViewById(R.id.swipeRefreshLayout);
  swipeRefreshLayout.setColorSchemeResources(android.R.color.holo_blue_bright,
  android.R.color.holo_green_light,
  android.R.color.holo_orange_light,
@@ -65,7 +65,7 @@ public class AuditLogActivity extends AppCompatActivity {
  });

  // Navigate to user profile on click
- final ListView auditLogListView = (ListView) findViewById(R.id.auditLogListView);
+ final ListView auditLogListView = findViewById(R.id.auditLogListView);
  auditLogListView.setOnItemClickListener(new AdapterView.OnItemClickListener() {
  @Override
  public void onItemClick(AdapterView<?> parent, View view, int position, long id) {
@@ -88,15 +88,15 @@ public class AuditLogActivity extends AppCompatActivity {
  * Refresh the view.
  */
  private void refreshView(String documentId) {
- final SwipeRefreshLayout swipeRefreshLayout = (SwipeRefreshLayout) findViewById(R.id.swipeRefreshLayout);
- final ProgressBar progressBar = (ProgressBar) findViewById(R.id.progressBar);
- final ListView auditLogListView = (ListView) findViewById(R.id.auditLogListView);
+ final SwipeRefreshLayout swipeRefreshLayout = findViewById(R.id.swipeRefreshLayout);
+ final ProgressBar progressBar = findViewById(R.id.progressBar);
+ final ListView auditLogListView = findViewById(R.id.auditLogListView);
  progressBar.setVisibility(View.VISIBLE);
  auditLogListView.setVisibility(View.GONE);
  AuditLogResource.list(this, documentId, new HttpCallback() {
  @Override
  public void onSuccess(JSONObject response) {
- auditLogListView.setAdapter(new AuditLogListAdapter(response.optJSONArray("logs")));
+ auditLogListView.setAdapter(new AuditLogListAdapter(AuditLogActivity.this, response.optJSONArray("logs")));
  }

  @Override

@@ -1,8 +1,6 @@
  package com.sismics.docs.adapter;

  import android.content.Context;
- import android.content.Intent;
- import android.text.TextUtils;
  import android.text.format.DateFormat;
  import android.view.LayoutInflater;
  import android.view.View;
@@ -30,12 +28,19 @@ public class AuditLogListAdapter extends BaseAdapter {
  */
  private List<JSONObject> logList;

+ /**
+ * Context.
+ */
+ private Context context;
+
  /**
  * Audit log list adapter.
  *
+ * @param context Context
  * @param logs Logs
  */
- public AuditLogListAdapter(JSONArray logs) {
+ public AuditLogListAdapter(Context context, JSONArray logs) {
+ this.context = context;
  this.logList = new ArrayList<>();

  for (int i = 0; i < logs.length(); i++) {
@@ -67,11 +72,21 @@ public class AuditLogListAdapter extends BaseAdapter {

  // Build message
  final JSONObject log = getItem(position);
- StringBuilder message = new StringBuilder(log.optString("class"));
+ StringBuilder message = new StringBuilder();
+
+ // Translate entity name
+ int stringId = context.getResources().getIdentifier("auditlog_" + log.optString("class"), "string", context.getPackageName());
+ if (stringId == 0) {
+ message.append(log.optString("class"));
+ } else {
+ message.append(context.getResources().getString(stringId));
+ }
+ message.append(" ");
+
  switch (log.optString("type")) {
- case "CREATE": message.append(" created"); break;
- case "UPDATE": message.append(" updated"); break;
- case "DELETE": message.append(" deleted"); break;
+ case "CREATE": message.append(context.getResources().getString(R.string.auditlog_created)); break;
+ case "UPDATE": message.append(context.getResources().getString(R.string.auditlog_updated)); break;
+ case "DELETE": message.append(context.getResources().getString(R.string.auditlog_deleted)); break;
  }
  switch (log.optString("class")) {
  case "Document":
@@ -85,9 +100,9 @@ public class AuditLogListAdapter extends BaseAdapter {
  }

  // Fill the view
- TextView usernameTextView = (TextView) view.findViewById(R.id.usernameTextView);
- TextView messageTextView = (TextView) view.findViewById(R.id.messageTextView);
- TextView dateTextView = (TextView) view.findViewById(R.id.dateTextView);
+ TextView usernameTextView = view.findViewById(R.id.usernameTextView);
+ TextView messageTextView = view.findViewById(R.id.messageTextView);
+ TextView dateTextView = view.findViewById(R.id.dateTextView);
  usernameTextView.setText(log.optString("username"));
  messageTextView.setText(message);
  String date = DateFormat.getDateFormat(parent.getContext()).format(new Date(log.optLong("create_date")));

@@ -33,6 +33,7 @@ public class LanguageAdapter extends BaseAdapter {
  }
  languageList.add(new Language("fra", R.string.language_french, R.drawable.fra));
  languageList.add(new Language("eng", R.string.language_english, R.drawable.eng));
+ languageList.add(new Language("deu", R.string.language_german, R.drawable.deu));
  }

  @Override

@@ -63,14 +63,13 @@ public class DocListFragment extends Fragment {
  recyclerView.setAdapter(adapter);
  recyclerView.setHasFixedSize(true);
  recyclerView.setLongClickable(true);
- recyclerView.addItemDecoration(new DividerItemDecoration(getResources().getDrawable(R.drawable.abc_list_divider_mtrl_alpha)));

  // Configure the LayoutManager
  final LinearLayoutManager layoutManager = new LinearLayoutManager(getActivity());
  recyclerView.setLayoutManager(layoutManager);

  // Configure the swipe refresh layout
- swipeRefreshLayout = (SwipeRefreshLayout) view.findViewById(R.id.swipeRefreshLayout);
+ swipeRefreshLayout = view.findViewById(R.id.swipeRefreshLayout);
  swipeRefreshLayout.setColorSchemeResources(android.R.color.holo_blue_bright,
  android.R.color.holo_green_light,
  android.R.color.holo_orange_light,
@@ -194,7 +193,7 @@ public class DocListFragment extends Fragment {
  private void loadDocuments(final View view, final boolean reset) {
  if (view == null) return;
  final View progressBar = view.findViewById(R.id.progressBar);
- final TextView documentsEmptyView = (TextView) view.findViewById(R.id.documentsEmptyView);
+ final TextView documentsEmptyView = view.findViewById(R.id.documentsEmptyView);

  if (reset) {
  loading = true;

@@ -1,10 +1,12 @@
  package com.sismics.docs.service;

  import android.app.IntentService;
+ import android.app.NotificationChannel;
  import android.app.NotificationManager;
  import android.app.PendingIntent;
  import android.content.Intent;
  import android.net.Uri;
+ import android.os.Build;
  import android.os.PowerManager;
  import android.support.v4.app.NotificationCompat;
  import android.support.v4.app.NotificationCompat.Builder;
@@ -29,7 +31,8 @@ import okhttp3.internal.Util;
  * @author bgamard
  */
  public class FileUploadService extends IntentService {
- private static final String TAG = "FileUploadService";
+ private static final String TAG = "sismicsdocs:fileupload";
+ private static final String CHANNEL_ID = "FileUploadService";

  private static final int UPLOAD_NOTIFICATION_ID = 1;
  private static final int UPLOAD_NOTIFICATION_ID_DONE = 2;
@@ -49,18 +52,30 @@ public class FileUploadService extends IntentService {
  super.onCreate();

  notificationManager = (NotificationManager) getSystemService(NOTIFICATION_SERVICE);
- notification = new NotificationCompat.Builder(this);
+ initChannels();
+ notification = new NotificationCompat.Builder(this, CHANNEL_ID);
  PowerManager pm = (PowerManager) getSystemService(POWER_SERVICE);
  wakeLock = pm.newWakeLock(PowerManager.PARTIAL_WAKE_LOCK, TAG);
  }

+ private void initChannels() {
+ if (Build.VERSION.SDK_INT < 26) {
+ return;
+ }
+
+ NotificationChannel channel = new NotificationChannel(CHANNEL_ID,
+ "File Upload", NotificationManager.IMPORTANCE_HIGH);
+ channel.setDescription("Used to show file upload progress");
+ notificationManager.createNotificationChannel(channel);
+ }
+
  @Override
  protected void onHandleIntent(Intent intent) {
  if (intent == null) {
  return;
  }

- wakeLock.acquire();
+ wakeLock.acquire(60_000 * 30); // 30 minutes upload time maximum
  try {
  onStart();
  handleFileUpload(intent.getStringExtra(PARAM_DOCUMENT_ID), (Uri) intent.getParcelableExtra(PARAM_URI));
@@ -77,7 +92,7 @@ public class FileUploadService extends IntentService {
  *
  * @param documentId Document ID
  * @param uri Data URI
- * @throws IOException
+ * @throws IOException e
  */
  private void handleFileUpload(final String documentId, final Uri uri) throws Exception {
  final InputStream is = getContentResolver().openInputStream(uri);

@@ -156,7 +156,7 @@ public class OkHttpUtil {
  public static OkHttpClient buildClient(final Context context) {
  // One-time header computation
  if (userAgent == null) {
- userAgent = "Sismics Docs Android " + ApplicationUtil.getVersionName(context) + "/Android " + Build.VERSION.RELEASE + "/" + Build.MODEL;
+ userAgent = "Teedy Android " + ApplicationUtil.getVersionName(context) + "/Android " + Build.VERSION.RELEASE + "/" + Build.MODEL;
  }

  if (acceptLanguage == null) {

@@ -39,7 +39,9 @@ public class SearchQueryBuilder {
  */
  public SearchQueryBuilder simpleSearch(String simpleSearch) {
  if (isValid(simpleSearch)) {
- query.append(SEARCH_SEPARATOR).append(simpleSearch);
+ query.append(SEARCH_SEPARATOR)
+ .append("simple:")
+ .append(simpleSearch);
  }
  return this;
  }

docs-android/app/src/main/res/drawable-xhdpi/deu.png (new binary file, 9.1 KiB)
docs-android/app/src/main/res/drawable-xxhdpi/deu.png (new binary file, 20 KiB)

@@ -29,7 +29,7 @@
  android:layout_width="wrap_content"
  android:layout_height="wrap_content"
  android:fontFamily="sans-serif-light"
- android:textColor="#212121"
+ android:textColor="?android:attr/textColorPrimary"
  android:text="Test"
  android:textSize="16sp"
  android:ellipsize="end"
@@ -46,7 +46,7 @@
  android:layout_width="wrap_content"
  android:layout_height="wrap_content"
  android:fontFamily="sans-serif-light"
- android:textColor="#777777"
+ android:textColor="?android:attr/textColorPrimary"
  android:text="test2"
  android:textSize="16sp"
  android:maxLines="1"
@@ -69,7 +69,7 @@
  android:layout_alignParentEnd="true"
  android:layout_alignParentRight="true"
  android:layout_alignParentTop="true"
- android:textColor="#777777"
+ android:textColor="?android:attr/textColorPrimary"
  android:fontFamily="sans-serif-light"/>

  </RelativeLayout>

|

||||

@ -9,23 +9,22 @@

|

||||

<android.support.design.widget.CoordinatorLayout

|

||||

xmlns:app="http://schemas.android.com/apk/res-auto"

|

||||

android:id="@+id/overview_coordinator_layout"

|

||||

android:theme="@style/ThemeOverlay.AppCompat.Dark.ActionBar"

|

||||

android:layout_width="match_parent"

|

||||

android:layout_height="match_parent">

|

||||

|

||||

<android.support.design.widget.AppBarLayout

|

||||

android:layout_width="match_parent"

|

||||

android:layout_height="wrap_content">

|

||||

|

||||

|

||||

<android.support.v7.widget.Toolbar

|

||||

android:id="@+id/toolbar"

|

||||

<android.support.design.widget.AppBarLayout

|

||||

android:layout_width="match_parent"

|

||||

android:layout_height="?attr/actionBarSize"

|

||||

app:popupTheme="@style/ThemeOverlay.AppCompat.Light"

|

||||

app:layout_scrollFlags="enterAlways|scroll|snap" />

|

||||

android:layout_height="wrap_content">

|

||||

|

||||

</android.support.design.widget.AppBarLayout>

|

||||

|

||||

<android.support.v7.widget.Toolbar

|

||||

android:id="@+id/toolbar"

|

||||

android:layout_width="match_parent"

|

||||

android:layout_height="?attr/actionBarSize"

|

||||

app:popupTheme="@style/AppTheme"

|

||||

app:layout_scrollFlags="enterAlways|scroll|snap" />

|

||||

|

||||

</android.support.design.widget.AppBarLayout>

|

||||

|

||||

<fragment

|

||||

android:id="@+id/main_fragment"

|

||||

|

||||

Binary file changed: 5.7 KiB before, 6.3 KiB after
Binary file changed: 11 KiB before, 9.7 KiB after

docs-android/app/src/main/res/values-de/strings.xml (new file, 159 lines changed)
@@ -0,0 +1,159 @@
+ <?xml version="1.0" encoding="utf-8"?>
+ <resources>
+
+ <!-- Validation -->
+ <string name="validate_error_email">Ungültige E-Mail</string>
+ <string name="validate_error_length_min">Zu kurz (min. %d)</string>
+ <string name="validate_error_length_max">Zu lang (max. %d)</string>
+ <string name="validate_error_required">Erforderlich</string>
+ <string name="validate_error_alphanumeric">Nur Buchstaben und Zahlen</string>
+
+ <!-- App -->
+ <string name="drawer_open">Navigationsleiste öffnen</string>
+ <string name="drawer_close">Navigationsleiste schließen</string>
+ <string name="login_explain"><![CDATA[Um zu beginnen, müssen Sie Teedy Server herunterladen und installieren <a href="https://github.com/sismics/docs">github.com/sismics/docs</a>, sowie die Login-Daten unten eingeben]]></string>
+ <string name="server">Server</string>
+ <string name="username">Username</string>
+ <string name="password">Password</string>
+ <string name="login">Login</string>
+ <string name="ok">OK</string>
+ <string name="cancel">Abbrechen</string>
+ <string name="login_fail_title">Login gescheitert</string>
+ <string name="login_fail">Benutzername oder Passwort falsch</string>
+ <string name="network_error_title">Netzwerkfehler</string>
+ <string name="network_error">Netzwerkfehler, überprüfen Sie die Internetverbindung und die Server-URL</string>
+ <string name="invalid_url_title">Ungültige URL</string>
+ <string name="invalid_url">Bitte überprüfen Sie die Server-URL und versuchen Sie es erneut</string>
+ <string name="crash_toast_text">Ein Absturz ist aufgetreten, ein Bericht wurde gesendet, um dieses Problem zu beheben</string>
+ <string name="created_date">Erstellungsdatum</string>
+ <string name="download_file">Aktuelle Datei herunterladen</string>
+ <string name="download_document">Herunterladen</string>
+ <string name="action_search">Dokumente durchsuchen</string>
+ <string name="all_documents">Alle Dokumente</string>
+ <string name="shared_documents">Geteilte Dokumente</string>
+ <string name="all_tags">Alle Tags</string>
+ <string name="no_tags">Keine Tags</string>
+ <string name="error_loading_tags">Fehler beim Laden von Tags</string>
+ <string name="no_documents">Keine Dokumente</string>
+ <string name="error_loading_documents">Fehler beim Laden von Dokumenten</string>
+ <string name="no_files">Keine Dateien</string>
+ <string name="error_loading_files">Fehler beim Laden von Dateien</string>
+ <string name="new_document">Neues Dokument</string>
+ <string name="share">Teilen</string>
+ <string name="close">Schließen</string>
+ <string name="add">Hinzufügen</string>
+ <string name="add_share_hint">Freigabename (optional)</string>
+ <string name="document_not_shared">Dieses Dokument wird derzeit nicht freigegeben</string>
+ <string name="delete_share">Diese Freigabe löschen</string>
+ <string name="send_share">Send this share link</string>
+ <string name="error_loading_shares">Fehler beim Laden von Freigaben</string>
+ <string name="error_adding_share">Fehler beim Hinzufügen der Freigabe</string>
+ <string name="share_default_name">Freigabe Link</string>
+ <string name="error_deleting_share">Fehler beim Löschen der Freigabe</string>
+ <string name="send_share_to">Freigabe senden an</string>
+ <string name="upload_file">Datei hinzufügen</string>
+ <string name="upload_from">Datei hochladen von</string>
+ <string name="settings">Einstellungen</string>
+ <string name="logout">Ausloggen</string>
+ <string name="version">Version</string>
+ <string name="build">Build</string>
+ <string name="pref_advanced_category">Erweiterte Einstellungen</string>
+ <string name="pref_about_category">Über</string>
+ <string name="pref_github">GitHub</string>
+ <string name="pref_issue">Fehler berichten</string>
+ <string name="pref_clear_cache_title">Cache leeren</string>
+ <string name="pref_clear_cache_summary">Zwischengespeicherte Dateien löschen</string>
+ <string name="pref_clear_cache_success">Cache wurde geleert</string>
+ <string name="pref_clear_history_title">Suchhistorie löschen</string>
+ <string name="pref_clear_history_summary">Leert die aktuellen Suchvorschläge</string>
+ <string name="pref_clear_history_success">Suchvorschläge wurden gelöscht</string>
+ <string name="pref_cache_size">Cache Größe</string>
+ <string name="save">Speichern</string>
+ <string name="edit_document">Bearbeiten</string>
+ <string name="error_editing_document">Netzwerkfehler, bitte versuchen Sie es erneut</string>
+ <string name="please_wait">Bitte warten</string>
+ <string name="document_editing_message">Daten werden gesendet</string>
+ <string name="delete_document">Löschen</string>
+ <string name="delete_document_title">Dokument löschen</string>
+ <string name="delete_document_message">Dieses Dokument und alle zugehörigen Dateien wirklich löschen?</string>
+ <string name="document_delete_failure">Netzwerkfehler beim Löschen des Dokuments</string>
+ <string name="document_deleting_message">Lösche Dokument</string>
+ <string name="delete_file_title">Datei löschen</string>
+ <string name="delete_file_message">Die aktuelle Datei wirklich löschen?</string>
+ <string name="file_delete_failure">Netzwerkfehler beim Löschen der Datei</string>
+ <string name="file_deleting_message">Lösche Datei</string>
+ <string name="error_reading_file">Fehler beim Lesen der Datei</string>
+ <string name="upload_notification_title">Teedy</string>
+ <string name="upload_notification_message">Neue Datei in das Dokument hochladen</string>
+ <string name="upload_notification_error">Fehler beim Hochladen der neuen Datei</string>
+ <string name="delete_file">Aktuelle Datei löschen</string>
+ <string name="advanced_search">Erweiterte Suche</string>
+ <string name="search">Suche</string>
+ <string name="add_tags">Tags hinzufügen</string>
+ <string name="creation_date">Erstellungsdatum</string>
+ <string name="description">Beschreibung</string>
+ <string name="title">Titel</string>
+ <string name="simple_search">Einfache Suche</string>
+ <string name="fulltext_search">Volltextsuche</string>
+ <string name="creator">Ersteller</string>
+ <string name="after_date">Nach Datum</string>
+ <string name="before_date">Vor Datum</string>
+ <string name="search_tags">Tags durchsuchen</string>
+ <string name="all_languages">Alle Sprachen</string>

|

||||

<string name="toggle_informations">Informationen anzeigen</string>

|

||||

<string name="who_can_access">Wer kann darauf zugreifen?</string>

|

||||

<string name="comments">Kommentare</string>

|

||||

<string name="no_comments">Keine Kommentare</string>

|

||||

<string name="error_loading_comments">Fehler beim Laden von Kommentaren</string>

|

||||

<string name="send">Senden</string>

|

||||

<string name="add_comment">Kommentar hinzufügen</string>

|

||||

<string name="comment_add_failure">Fehler beim Hinzufügen des Kommentars</string>

|

||||

<string name="adding_comment">Füge Kommentar hinzu</string>

|

||||

<string name="comment_delete">Kommentar löschen</string>

|

||||

<string name="deleting_comment">Lösche Kommentar</string>

|

||||

<string name="error_deleting_comment">Fehler beim Löschen des Kommentars</string>

|

||||

<string name="export_pdf">PDF</string>

|

||||

<string name="download">Download</string>

|

||||

<string name="margin">Rand</string>

|

||||

<string name="fit_image_to_page">Bild an Seite anpassen</string>

|

||||

<string name="export_comments">Kommentare exportieren</string>

|

||||

<string name="export_metadata">Metadaten exportieren</string>

|

||||

<string name="mm">mm</string>

|

||||

<string name="download_file_title">Teedy Datei Export</string>

|

||||

<string name="download_document_title">Teedy Dokumentenexport</string>

|

||||

<string name="download_pdf_title">Teedy PDF Export</string>

|

||||

<string name="latest_activity">Letzte Aktivität</string>

|

||||

<string name="activity">Aktivitäten</string>

|

||||

<string name="email">E-Mail</string>

|

||||

<string name="storage_quota">Speicherbegrenzung</string>

|

||||

<string name="storage_display">%1$d/%2$d MB</string>

|

||||

<string name="validation_code">Validierungscode</string>

|

||||

<string name="shared">Geteilt</string>

|

||||

<string name="language">Sprache</string>

|

||||

<string name="coverage">Geltungsbereich</string>

|

||||

<string name="type">Typ</string>

|

||||

<string name="source">Quelle</string>

|

||||

<string name="format">Format</string>

|

||||

<string name="publisher">Verleger</string>

|

||||

<string name="identifier">Identifikator</string>

|

||||

<string name="subject">Thema</string>

|

||||

<string name="rights">Rechte</string>

|

||||

<string name="contributors">Mitwirkende</string>

|

||||

<string name="relations">Beziehungen</string>

|

||||

|

||||

<!-- Audit log -->

|

||||

<string name="auditlog_Acl">ACL</string>

|

||||

<string name="auditlog_Comment">Kommentar</string>

|

||||

<string name="auditlog_Document">Dokument</string>

|

||||

<string name="auditlog_File">Datei</string>

|

||||

<string name="auditlog_Group">Gruppe</string>

|

||||

<string name="auditlog_Route">Workflow</string>

|

||||

<string name="auditlog_RouteModel">Workflow-Muster</string>

|

||||

<string name="auditlog_Tag">Tag</string>

|

||||

<string name="auditlog_User">Benutzer</string>

|

||||

<string name="auditlog_Webhook">Webhook</string>

|

||||

<string name="auditlog_created">erstellt</string>

|

||||

<string name="auditlog_updated">aktualisiert</string>

|

||||

<string name="auditlog_deleted">gelöscht</string>

|

||||

|

||||

</resources>

|

||||

@ -11,7 +11,7 @@

|

||||

<!-- App -->

|

||||

<string name="drawer_open">Ouvrir le menu de navigation</string>

|

||||

<string name="drawer_close">Fermer le menu de navigation</string>

|

||||

<string name="login_explain"><![CDATA[Pour commencer, vous devez télécharger et installer le serveur Sismics Docs sur <a href="https://github.com/sismics/docs">github.com/sismics/docs</a> et entrer son URL ci-dessous]]></string>

|

||||

<string name="login_explain"><![CDATA[Pour commencer, vous devez télécharger et installer le serveur Teedy sur <a href="https://github.com/sismics/docs">github.com/sismics/docs</a> et entrer son URL ci-dessous]]></string>

|

||||

<string name="server">Serveur</string>

|

||||

<string name="username">Nom d\'utilisateur</string>

|

||||

<string name="password">Mot de passe</string>

|

||||

@ -83,7 +83,7 @@

|

||||

<string name="file_delete_failure">Erreur réseau lors de la suppression du fichier</string>

|

||||

<string name="file_deleting_message">Suppression du fichier</string>

|

||||

<string name="error_reading_file">Erreur lors de la lecture du fichier</string>

|

||||

<string name="upload_notification_title">Sismics Docs</string>

|

||||

<string name="upload_notification_title">Teedy</string>

|

||||

<string name="upload_notification_message">Envoi du nouveau fichier</string>

|

||||

<string name="upload_notification_error">Erreur lors de l\'envoi du nouveau fichier</string>

|

||||

<string name="delete_file">Supprimer ce fichier</string>

|

||||

@ -119,9 +119,9 @@

|

||||

<string name="export_comments">Exporter les commentaires</string>

|

||||

<string name="export_metadata">Exporter les métadonnées</string>

|

||||

<string name="mm">mm</string>

|

||||

<string name="download_file_title">Export de fichier Sismics Docs</string>

|

||||

<string name="download_document_title">Export de document Sismics Docs</string>

|

||||

<string name="download_pdf_title">Export PDF Sismics Docs</string>

|

||||

<string name="download_file_title">Export de fichier Teedy</string>

|

||||

<string name="download_document_title">Export de document Teedy</string>

|

||||

<string name="download_pdf_title">Export PDF Teedy</string>

|

||||

<string name="latest_activity">Activité récente</string>

|

||||

<string name="activity">Activité</string>

|

||||

<string name="email">E-mail</string>

|

||||

@ -141,4 +141,19 @@

|

||||

<string name="contributors">Contributeurs</string>

|

||||

<string name="relations">Relations</string>

|

||||

|

||||

<!-- Audit log -->

|

||||

<string name="auditlog_Acl">ACL</string>

|

||||

<string name="auditlog_Comment">Commentaire</string>

|

||||

<string name="auditlog_Document">Document</string>

|

||||

<string name="auditlog_File">Fichier</string>

|

||||

<string name="auditlog_Group">Groupe</string>

|

||||

<string name="auditlog_Route">Workflow</string>

|

||||

<string name="auditlog_RouteModel">Modèle de workflow</string>

|

||||

<string name="auditlog_Tag">Tag</string>

|

||||

<string name="auditlog_User">Utilisateur</string>

|

||||

<string name="auditlog_Webhook">Webhook</string>

|

||||

<string name="auditlog_created">créé</string>

|

||||

<string name="auditlog_updated">mis à jour</string>

|

||||

<string name="auditlog_deleted">supprimé</string>

|

||||

|

||||

</resources>

|

||||

|

||||

@ -9,10 +9,10 @@

|

||||

<string name="validate_error_alphanumeric">Only letters and numbers</string>

|

||||

|

||||

<!-- App -->

|

||||

<string name="app_name" translatable="false">Sismics Docs</string>

|

||||

<string name="app_name" translatable="false">Teedy</string>

|

||||

<string name="drawer_open">Open navigation drawer</string>

|

||||

<string name="drawer_close">Close navigation drawer</string>

|

||||

<string name="login_explain"><![CDATA[To start, you must download and install Sismics Docs Server on <a href="https://github.com/sismics/docs">github.com/sismics/docs</a> and enter its below]]></string>

|

||||

<string name="login_explain"><![CDATA[To start, you must download and install Teedy Server on <a href="https://github.com/sismics/docs">github.com/sismics/docs</a> and enter its URL below]]></string>

|

||||

<string name="server">Server</string>

|

||||

<string name="username">Username</string>

|

||||

<string name="password">Password</string>

|

||||

@ -71,6 +71,7 @@

|

||||

<string name="pref_cache_size">Cache size</string>

|

||||

<string name="language_french" translatable="false">Français</string>

|

||||

<string name="language_english" translatable="false">English</string>

|

||||

<string name="language_german" translatable="false">Deutsch</string>

|

||||

<string name="save">Save</string>

|

||||

<string name="edit_document">Edit</string>

|

||||

<string name="error_editing_document">Network error, please try again</string>

|

||||

@ -86,7 +87,7 @@

|

||||

<string name="file_delete_failure">Network error while deleting the current file</string>

|

||||

<string name="file_deleting_message">Deleting file</string>

|

||||

<string name="error_reading_file">Error while reading the file</string>

|

||||

<string name="upload_notification_title">Sismics Docs</string>

|

||||

<string name="upload_notification_title">Teedy</string>

|

||||

<string name="upload_notification_message">Uploading the new file to the document</string>

|

||||

<string name="upload_notification_error">Error uploading the new file</string>

|

||||

<string name="delete_file">Delete current file</string>

|

||||

@ -122,9 +123,9 @@

|

||||

<string name="export_comments">Export comments</string>

|

||||

<string name="export_metadata">Export metadata</string>

|

||||

<string name="mm">mm</string>

|

||||

<string name="download_file_title">Sismics Docs file export</string>

|

||||

<string name="download_document_title">Sismics Docs document export</string>

|

||||

<string name="download_pdf_title">Sismics Docs PDF export</string>

|

||||

<string name="download_file_title">Teedy file export</string>

|

||||

<string name="download_document_title">Teedy document export</string>

|

||||

<string name="download_pdf_title">Teedy PDF export</string>

|

||||

<string name="latest_activity">Latest activity</string>

|

||||

<string name="activity">Activity</string>

|

||||

<string name="email">E-mail</string>

|

||||

@ -144,4 +145,19 @@

|

||||

<string name="contributors">Contributors</string>

|

||||

<string name="relations">Relations</string>

|

||||

|

||||

<!-- Audit log -->

|

||||

<string name="auditlog_Acl">ACL</string>

|

||||

<string name="auditlog_Comment">Comment</string>

|

||||

<string name="auditlog_Document">Document</string>

|

||||

<string name="auditlog_File">File</string>

|

||||

<string name="auditlog_Group">Group</string>

|

||||

<string name="auditlog_Route">Workflow</string>

|

||||

<string name="auditlog_RouteModel">Workflow model</string>

|

||||

<string name="auditlog_Tag">Tag</string>

|

||||

<string name="auditlog_User">User</string>

|

||||

<string name="auditlog_Webhook">Webhook</string>

|

||||

<string name="auditlog_created">created</string>

|

||||

<string name="auditlog_updated">updated</string>

|

||||

<string name="auditlog_deleted">deleted</string>

|

||||

|

||||

</resources>

|

||||

|

||||

@ -1,12 +1,12 @@

|

||||

<resources>

|

||||

|

||||

<style name="AppTheme" parent="Theme.AppCompat.Light.DarkActionBar">

|

||||

<style name="AppTheme" parent="Theme.AppCompat.DayNight">

|

||||

<item name="colorPrimary">@color/colorPrimary</item>

|

||||

<item name="colorPrimaryDark">@color/colorPrimaryDark</item>

|

||||

<item name="colorAccent">@color/colorAccent</item>

|

||||

</style>

|

||||

|

||||

<style name="AppTheme.NoActionBar" parent="Theme.AppCompat.Light.DarkActionBar">

|

||||

<style name="AppTheme.NoActionBar" parent="Theme.AppCompat.DayNight.NoActionBar">

|

||||

<item name="windowActionBar">false</item>

|

||||

<item name="windowNoTitle">true</item>

|

||||

<item name="colorPrimary">@color/colorPrimary</item>

|

||||

@ -14,7 +14,7 @@

|

||||

<item name="colorAccent">@color/colorAccent</item>

|

||||

</style>

|

||||

|

||||

<style name="AppThemeDark" parent="Theme.AppCompat.NoActionBar">

|

||||

<style name="AppThemeDark" parent="Theme.AppCompat.DayNight.NoActionBar">

|

||||

<item name="colorPrimary">@color/colorPrimary</item>

|

||||

<item name="colorPrimaryDark">@color/colorPrimaryDark</item>

|

||||

<item name="colorAccent">@color/colorAccent</item>

|

||||

|

||||

@ -1,6 +1,6 @@

|

||||

#Thu Oct 18 22:37:49 CEST 2018

|

||||

#Tue May 07 11:49:13 CEST 2019

|

||||

distributionBase=GRADLE_USER_HOME

|

||||

distributionPath=wrapper/dists

|

||||

zipStoreBase=GRADLE_USER_HOME

|

||||

zipStorePath=wrapper/dists

|

||||

distributionUrl=https\://services.gradle.org/distributions/gradle-4.6-all.zip

|

||||

distributionUrl=https\://services.gradle.org/distributions/gradle-5.1.1-all.zip

|

||||

|

||||

@ -5,7 +5,7 @@

|

||||

<parent>

|

||||

<groupId>com.sismics.docs</groupId>

|

||||

<artifactId>docs-parent</artifactId>

|

||||

<version>1.6-SNAPSHOT</version>

|

||||

<version>1.9-SNAPSHOT</version>

|

||||

<relativePath>..</relativePath>

|

||||

</parent>

|

||||

|

||||

@ -132,6 +132,11 @@

|

||||

<artifactId>okhttp</artifactId>

|

||||

</dependency>

|

||||

|

||||

<dependency>

|

||||

<groupId>org.apache.directory.api</groupId>

|

||||

<artifactId>api-all</artifactId>

|

||||

</dependency>

|

||||

|

||||

<!-- Only there to read old index and rebuild them -->

|

||||

<dependency>

|

||||

<groupId>org.apache.lucene</groupId>

|

||||

@ -190,6 +195,25 @@

|

||||

<artifactId>postgresql</artifactId>

|

||||

</dependency>

|

||||

|

||||

<!-- JDK 11 JAXB dependencies -->

|

||||

<dependency>

|

||||

<groupId>javax.xml.bind</groupId>

|

||||

<artifactId>jaxb-api</artifactId>

|

||||

<version>2.3.0</version>

|

||||

</dependency>

|

||||

|

||||

<dependency>

|

||||

<groupId>com.sun.xml.bind</groupId>

|

||||

<artifactId>jaxb-core</artifactId>

|

||||

<version>2.3.0</version>

|

||||

</dependency>

|

||||

|

||||

<dependency>

|

||||

<groupId>com.sun.xml.bind</groupId>

|

||||

<artifactId>jaxb-impl</artifactId>

|

||||

<version>2.3.0</version>

|

||||

</dependency>

|

||||

|

||||

<!-- Test dependencies -->

|

||||

<dependency>

|

||||

<groupId>junit</groupId>

|

||||

|

||||

@ -42,5 +42,20 @@ public enum ConfigType {

|

||||

INBOX_PORT,

|

||||

INBOX_USERNAME,

|

||||

INBOX_PASSWORD,

|

||||

INBOX_TAG

|

||||

INBOX_TAG,

|

||||

INBOX_AUTOMATIC_TAGS,

|

||||

INBOX_DELETE_IMPORTED,

|

||||

|

||||

/**

|

||||

* LDAP connection.

|

||||

*/

|

||||

LDAP_ENABLED,

|

||||

LDAP_HOST,

|

||||

LDAP_PORT,

|

||||

LDAP_ADMIN_DN,

|

||||

LDAP_ADMIN_PASSWORD,

|

||||

LDAP_BASE_DN,

|

||||

LDAP_FILTER,

|

||||

LDAP_DEFAULT_EMAIL,

|

||||

LDAP_DEFAULT_STORAGE

|

||||

}

|

||||

|

||||

@ -38,7 +38,7 @@ public class Constants {

|

||||

/**

|

||||

* Supported document languages.

|

||||

*/

|

||||

public static final List<String> SUPPORTED_LANGUAGES = Lists.newArrayList("eng", "fra", "ita", "deu", "spa", "por", "pol", "rus", "ukr", "ara", "hin", "chi_sim", "chi_tra", "jpn", "tha", "kor");

|

||||

public static final List<String> SUPPORTED_LANGUAGES = Lists.newArrayList("eng", "fra", "ita", "deu", "spa", "por", "pol", "rus", "ukr", "ara", "hin", "chi_sim", "chi_tra", "jpn", "tha", "kor", "nld", "tur", "heb", "hun", "fin", "swe", "lav", "dan");

|

||||

|

||||

/**

|

||||

* Base URL environment variable.

|

||||

|

||||

@ -0,0 +1,14 @@

|

||||

package com.sismics.docs.core.constant;

|

||||

|

||||

/**

|

||||

* Metadata type.

|

||||

*

|

||||

* @author bgamard

|

||||

*/

|

||||

public enum MetadataType {

|

||||

STRING,

|

||||

INTEGER,

|

||||

FLOAT,

|

||||

DATE,

|

||||

BOOLEAN

|

||||

}
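
Note on the new `MetadataType` enum: the DAO later in this diff reads metadata values as strings (`DME_VALUE_C`) together with their type. The sketch below shows one way a stored string could be converted to a typed value. It is a minimal illustration, not the project's code: the class `MetadataValueSketch`, the `parse` helper, and the choice of epoch milliseconds for `DATE` are all assumptions.

```java
import java.util.Date;

public class MetadataValueSketch {
    // Local copy of the enum from the diff, so the sketch compiles on its own.
    public enum MetadataType { STRING, INTEGER, FLOAT, DATE, BOOLEAN }

    // Hypothetical helper: converts a raw stored string into a typed value.
    // Throws NumberFormatException when a numeric or date value is malformed.
    public static Object parse(MetadataType type, String raw) {
        switch (type) {
            case STRING:
                return raw;
            case INTEGER:
                return Integer.valueOf(raw);
            case FLOAT:
                return Double.valueOf(raw);
            case DATE:
                // Assumption: dates are stored as epoch milliseconds.
                return new Date(Long.parseLong(raw));
            case BOOLEAN:
                return Boolean.valueOf(raw);
            default:
                throw new IllegalArgumentException("Unknown type: " + type);
        }
    }
}
```

Keeping the conversion in one place means a malformed value fails at the boundary instead of deep inside search or export code.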

|

||||

@ -27,7 +27,6 @@ public class AuditLogDao {

|

||||

*

|

||||

* @param auditLog Audit log

|

||||

* @return New ID

|

||||

* @throws Exception

|

||||

*/

|

||||

public String create(AuditLog auditLog) {

|

||||

// Create the UUID

|

||||

@ -47,10 +46,9 @@ public class AuditLogDao {

|

||||

* @param paginatedList List of audit logs (updated by side effects)

|

||||

* @param criteria Search criteria

|

||||

* @param sortCriteria Sort criteria

|

||||

* @return List of audit logs

|

||||

*/

|

||||

public void findByCriteria(PaginatedList<AuditLogDto> paginatedList, AuditLogCriteria criteria, SortCriteria sortCriteria) {

|

||||

Map<String, Object> parameterMap = new HashMap<String, Object>();

|

||||

Map<String, Object> parameterMap = new HashMap<>();

|

||||

|

||||

StringBuilder baseQuery = new StringBuilder("select l.LOG_ID_C c0, l.LOG_CREATEDATE_D c1, u.USE_USERNAME_C c2, l.LOG_IDENTITY_C c3, l.LOG_CLASSENTITY_C c4, l.LOG_TYPE_C c5, l.LOG_MESSAGE_C c6 from T_AUDIT_LOG l ");

|

||||

baseQuery.append(" join T_USER u on l.LOG_IDUSER_C = u.USE_ID_C ");

|

||||

@ -63,14 +61,20 @@ public class AuditLogDao {

|

||||

queries.add(baseQuery + " where l.LOG_IDENTITY_C in (select f.FIL_ID_C from T_FILE f where f.FIL_IDDOC_C = :documentId) ");

|

||||

queries.add(baseQuery + " where l.LOG_IDENTITY_C in (select c.COM_ID_C from T_COMMENT c where c.COM_IDDOC_C = :documentId) ");

|

||||

queries.add(baseQuery + " where l.LOG_IDENTITY_C in (select a.ACL_ID_C from T_ACL a where a.ACL_SOURCEID_C = :documentId) ");

|

||||

queries.add(baseQuery + " where l.LOG_IDENTITY_C in (select r.RTE_ID_C from T_ROUTE r where r.RTE_IDDOCUMENT_C = :documentId) ");

|

||||

parameterMap.put("documentId", criteria.getDocumentId());

|

||||

}

|

||||

|

||||

if (criteria.getUserId() != null) {

|

||||

// Get all logs originating from the user, not necessarily on owned items

|

||||

// Filter out ACL logs

|

||||

queries.add(baseQuery + " where l.LOG_IDUSER_C = :userId and l.LOG_CLASSENTITY_C != 'Acl' ");

|

||||

parameterMap.put("userId", criteria.getUserId());

|

||||

if (criteria.isAdmin()) {

|

||||

// For admin users, display all logs except ACL logs

|

||||

queries.add(baseQuery + " where l.LOG_CLASSENTITY_C != 'Acl' ");

|

||||

} else {

|

||||

// Get all logs originating from the user, not necessarily on owned items

|

||||

// Filter out ACL logs

|

||||

queries.add(baseQuery + " where l.LOG_IDUSER_C = :userId and l.LOG_CLASSENTITY_C != 'Acl' ");

|

||||

parameterMap.put("userId", criteria.getUserId());

|

||||

}

|

||||

}

|

||||

|

||||

// Perform the search

|

||||

@ -78,7 +82,7 @@ public class AuditLogDao {

|

||||

List<Object[]> l = PaginatedLists.executePaginatedQuery(paginatedList, queryParam, sortCriteria);

|

||||

|

||||

// Assemble results

|

||||

List<AuditLogDto> auditLogDtoList = new ArrayList<AuditLogDto>();

|

||||

List<AuditLogDto> auditLogDtoList = new ArrayList<>();

|

||||

for (Object[] o : l) {

|

||||

int i = 0;

|

||||

AuditLogDto auditLogDto = new AuditLogDto();

|

||||

|

||||

@ -27,7 +27,6 @@ public class CommentDao {

|

||||

* @param comment Comment

|

||||

* @param userId User ID

|

||||

* @return New ID

|

||||

* @throws Exception

|

||||

*/

|

||||

public String create(Comment comment, String userId) {

|

||||

// Create the UUID

|

||||

@ -99,7 +98,7 @@ public class CommentDao {

|

||||

@SuppressWarnings("unchecked")

|

||||

List<Object[]> l = q.getResultList();

|

||||

|

||||

List<CommentDto> commentDtoList = new ArrayList<CommentDto>();

|

||||

List<CommentDto> commentDtoList = new ArrayList<>();

|

||||

for (Object[] o : l) {

|

||||

int i = 0;

|

||||

CommentDto commentDto = new CommentDto();

|

||||

@ -107,7 +106,7 @@ public class CommentDao {

|

||||

commentDto.setContent((String) o[i++]);

|

||||

commentDto.setCreateTimestamp(((Timestamp) o[i++]).getTime());

|

||||

commentDto.setCreatorName((String) o[i++]);

|

||||

commentDto.setCreatorEmail((String) o[i++]);

|

||||

commentDto.setCreatorEmail((String) o[i]);

|

||||

commentDtoList.add(commentDto);

|

||||

}

|

||||

return commentDtoList;

|

||||

|

||||

@ -56,7 +56,7 @@ public class ContributorDao {

|

||||

@SuppressWarnings("unchecked")

|

||||

public List<ContributorDto> getByDocumentId(String documentId) {

|

||||

EntityManager em = ThreadLocalContext.get().getEntityManager();

|

||||

StringBuilder sb = new StringBuilder("select u.USE_USERNAME_C, u.USE_EMAIL_C from T_CONTRIBUTOR c ");

|

||||

StringBuilder sb = new StringBuilder("select distinct u.USE_USERNAME_C, u.USE_EMAIL_C from T_CONTRIBUTOR c ");

|

||||

sb.append(" join T_USER u on u.USE_ID_C = c.CTR_IDUSER_C ");

|

||||

sb.append(" where c.CTR_IDDOC_C = :documentId ");

|

||||

Query q = em.createNativeQuery(sb.toString());

|

||||

|

||||

@ -46,12 +46,16 @@ public class DocumentDao {

|

||||

/**

|

||||

* Returns the list of all active documents.

|

||||

*

|

||||

* @param offset Offset

|

||||

* @param limit Limit

|

||||

* @return List of documents

|

||||

*/

|

||||

@SuppressWarnings("unchecked")

|

||||

public List<Document> findAll() {

|

||||

public List<Document> findAll(int offset, int limit) {

|

||||

EntityManager em = ThreadLocalContext.get().getEntityManager();

|

||||

Query q = em.createQuery("select d from Document d where d.deleteDate is null");

|

||||

q.setFirstResult(offset);

|

||||

q.setMaxResults(limit);

|

||||

return q.getResultList();

|

||||

}
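
Note on the new `findAll(int offset, int limit)` signature: callers that previously loaded every document in one call now need to page through the results. The sketch below shows one possible batching loop. It is an illustration under stated assumptions: `BatchIterationSketch`, `forEachBatch`, and the `pageLoader` function are hypothetical names, and the loop assumes the underlying query returns rows in a stable order, which the query in this hunk does not guarantee because it has no `order by`.

```java
import java.util.List;
import java.util.function.BiFunction;
import java.util.function.Consumer;

public class BatchIterationSketch {
    // Hypothetical helper: calls pageLoader(offset, limit) until a short or
    // empty page is returned, passing each non-empty page to the handler.
    // Returns the total number of items seen.
    public static <T> int forEachBatch(BiFunction<Integer, Integer, List<T>> pageLoader,
                                       int batchSize,
                                       Consumer<List<T>> handler) {
        int offset = 0;
        int total = 0;
        while (true) {
            List<T> batch = pageLoader.apply(offset, batchSize);
            if (batch.isEmpty()) {
                break;
            }
            handler.accept(batch);
            total += batch.size();
            offset += batch.size();
            // A page shorter than the batch size means there is nothing left.
            if (batch.size() < batchSize) {
                break;
            }
        }
        return total;
    }
}
```

With a DAO like the one in this diff, the loader could be a method reference such as `documentDao::findAll`, so only one batch of entities is held in memory at a time.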

|

||||

|

||||

@ -185,7 +189,7 @@ public class DocumentDao {

|

||||

}

|

||||

|

||||

/**

|

||||

* Update a document.

|

||||

* Update a document and log the action.

|

||||

*

|

||||

* @param document Document to update

|

||||

* @param userId User ID

|

||||

@ -212,6 +216,7 @@ public class DocumentDao {

|

||||

documentDb.setRights(document.getRights());

|

||||

documentDb.setCreateDate(document.getCreateDate());

|

||||

documentDb.setLanguage(document.getLanguage());

|

||||

documentDb.setFileId(document.getFileId());

|

||||

documentDb.setUpdateDate(new Date());

|

||||

|

||||

// Create audit log

|

||||

@ -220,6 +225,21 @@ public class DocumentDao {

|

||||

return documentDb;

|

||||

}

|

||||

|

||||

/**

|

||||

* Update the file ID on a document.

|

||||

*

|

||||

* @param document Document

|

||||

*/

|

||||

public void updateFileId(Document document) {

|

||||

EntityManager em = ThreadLocalContext.get().getEntityManager();

|

||||

Query query = em.createNativeQuery("update T_DOCUMENT d set DOC_IDFILE_C = :fileId, DOC_UPDATEDATE_D = :updateDate where d.DOC_ID_C = :id");

|

||||

query.setParameter("updateDate", new Date());

|

||||

query.setParameter("fileId", document.getFileId());

|

||||

query.setParameter("id", document.getId());

|

||||

query.executeUpdate();

|

||||

|

||||

}

|

||||

|

||||

/**

|

||||

* Returns the number of documents.

|

||||

*

|

||||

|

||||

@ -0,0 +1,89 @@

|

||||

package com.sismics.docs.core.dao;

|

||||

|

||||

import com.sismics.docs.core.constant.MetadataType;

|

||||

import com.sismics.docs.core.dao.dto.DocumentMetadataDto;

|

||||

import com.sismics.docs.core.model.jpa.DocumentMetadata;

|

||||

import com.sismics.util.context.ThreadLocalContext;

|

||||

|

||||

import javax.persistence.EntityManager;

|

||||

import javax.persistence.Query;

|

||||

import java.util.ArrayList;

|

||||

import java.util.List;

|

||||

import java.util.UUID;

|

||||

|

||||

/**

|

||||

* Document metadata DAO.

|

||||

*

|

||||

* @author bgamard

|

||||

*/

|

||||

public class DocumentMetadataDao {

|

||||

/**

|

||||

* Creates a new document metadata.

|

||||

*

|

||||

* @param documentMetadata Document metadata

|

||||

* @return New ID

|

||||

*/

|

||||

public String create(DocumentMetadata documentMetadata) {

|

||||

// Create the UUID

|

||||

documentMetadata.setId(UUID.randomUUID().toString());

|

||||

|

||||

// Create the document metadata

|

||||

EntityManager em = ThreadLocalContext.get().getEntityManager();

|

||||

em.persist(documentMetadata);

|

||||

|

||||

return documentMetadata.getId();

|

||||

}

|

||||

|

||||

/**

|

||||

* Updates a document metadata.

|

||||

*

|

||||

* @param documentMetadata Document metadata

|

||||

* @return Updated document metadata

|

||||

*/

|

||||

public DocumentMetadata update(DocumentMetadata documentMetadata) {

|

||||

EntityManager em = ThreadLocalContext.get().getEntityManager();

|

||||

|

||||

// Get the document metadata

|

||||

Query q = em.createQuery("select u from DocumentMetadata u where u.id = :id");

|

||||

q.setParameter("id", documentMetadata.getId());

|

||||

DocumentMetadata documentMetadataDb = (DocumentMetadata) q.getSingleResult();

|

||||

|

||||

// Update the document metadata

|

||||

documentMetadataDb.setValue(documentMetadata.getValue());

|

||||

|

||||

return documentMetadataDb;

|

||||

}

|

||||

|

||||

/**

|

||||

* Returns the list of all metadata values on a document.

|

||||

*

|

||||

* @param documentId Document ID

|

||||

* @return List of metadata

|

||||

*/

|

||||

@SuppressWarnings("unchecked")

|

||||

public List<DocumentMetadataDto> getByDocumentId(String documentId) {

|

||||

EntityManager em = ThreadLocalContext.get().getEntityManager();

|

||||

StringBuilder sb = new StringBuilder("select dm.DME_ID_C, dm.DME_IDDOCUMENT_C, dm.DME_IDMETADATA_C, dm.DME_VALUE_C, m.MET_TYPE_C");

|

||||

sb.append(" from T_DOCUMENT_METADATA dm, T_METADATA m ");

|

||||

sb.append(" where dm.DME_IDMETADATA_C = m.MET_ID_C and dm.DME_IDDOCUMENT_C = :documentId and m.MET_DELETEDATE_D is null");

|

||||

|

||||

// Perform the search

|

||||

Query q = em.createNativeQuery(sb.toString());

|

||||

q.setParameter("documentId", documentId);

|

||||

List<Object[]> l = q.getResultList();

|

||||

|

||||

// Assemble results

|

||||

List<DocumentMetadataDto> dtoList = new ArrayList<>();

|

||||

for (Object[] o : l) {

|

||||

int i = 0;

|

||||

DocumentMetadataDto dto = new DocumentMetadataDto();

|

||||

dto.setId((String) o[i++]);

|

||||

dto.setDocumentId((String) o[i++]);

|

||||

dto.setMetadataId((String) o[i++]);

|

||||

dto.setValue((String) o[i++]);

|

||||

dto.setType(MetadataType.valueOf((String) o[i]));

|

||||

dtoList.add(dto);

|

||||

}

|

||||

return dtoList;

|

||||

}

|

||||

}

|

||||

@ -43,12 +43,16 @@ public class FileDao {

|

||||

/**

|

||||

* Returns the list of all files.

|

||||

*

|

||||

* @param offset Offset

|

||||

* @param limit Limit

|

||||

* @return List of files

|

||||

*/

|

||||

@SuppressWarnings("unchecked")

|

||||

public List<File> findAll() {

|

||||