mirror of

https://github.com/sismics/docs.git

synced 2025-04-19 09:56:36 +02:00

Compare commits

78 Commits

| Author | SHA1 | Date |
|---|---|---|
| | c2d7f3ebc6 | |
| | 8f1ff56d34 | |
| | 11ae0ea7d3 | |
| | afa78857f9 | |
| | ae2423b2e9 | |
| | 01d3e746d8 | |
| | 13cd03a762 | |
| | ac7b3c4eb9 | |
| | 7effbc8de0 | |
| | 8c5f0c78e7 | |
| | 45e00ac93d | |
| | 80454afc0d | |
| | 428e898a7a | |
| | 13762eb67f | |
| | 04c43ebf7b | |
| | f9b5a5212d | |
| | 0351f94761 | |
| | a89543b555 | |
| | ce30b1a6ff | |
| | 1b382004cb | |
| | ab7ff25929 | |
| | eedf19ad9d | |
| | 941ace99c6 | |
| | 95e0b870f6 | |
| | 2bdb2dc34f | |
| | 22a44d0c8d | |
| | a9cdbdc03e | |
| | 3fd5470eae | |
| | 39f96cbd28 | |
| | 4501f10429 | |
| | bd0cde7e87 | |
| | dd36e08d7d | |
| | 4634def93e | |
| | 1974a8bb8d | |
| | e9a6609593 | |
| | b20577026e | |
| | dae9e137f7 | |
| | 1509d0c5bb | |
| | 430ebbd1c5 | |
| | b561eaee6d | |
| | 1aa21c3762 | |
| | c8a67177d8 | |
| | 59597e962d | |
| | c85a951a9e | |
| | 7f47a17633 | |
| | 690c961a55 | |
| | 21efd1e4a7 | |
| | ad27228429 | |
| | dd4a1667ca | |
| | 399d2b7951 | |
| | d51dfd6636 | |
| | ca85c1fa9f | |
| | 5e7f06070e | |
| | dc0c20cd0c | |
| | 98aa33341a | |
| | 1f7c0afc1e | |
| | 1ccce3f942 | |
| | 90d5bc8de7 | |
| | c6a685d7c0 | |
| | e6cfd899e5 | |
| | bd23f14792 | |
| | 46f6b9e537 | |
| | d5832c48e1 | |
| | 64ec0f63ca | |
| | 0b7c42e814 | |
| | d8dc63fc98 | |
| | 81a7f154c2 | |
| | af3263d471 | |
| | bbe5f19997 | |
| | f33650c099 | |
| | 58f81ec851 | |
| | c9262eb204 | |
| | 3637b832e5 | |
| | ee56cfe2b4 | |
| | 721410c7d0 | |
| | f0310e3933 | |
| | 302d7cccc4 | |
| | f9977d5ce6 | |

84

.github/workflows/build-deploy.yml

vendored

Normal file


@ -0,0 +1,84 @@

name: Maven CI/CD

on:
  push:
    branches: [master]
    tags: [v*]
  workflow_dispatch:

jobs:
  build_and_publish:
    runs-on: ubuntu-latest

    steps:
      - uses: actions/checkout@v2
      - name: Set up JDK 11
        uses: actions/setup-java@v2
        with:
          java-version: "11"
          distribution: "temurin"
          cache: maven
      - name: Install test dependencies
        run: sudo apt-get update && sudo apt-get -y -q --no-install-recommends install ffmpeg mediainfo tesseract-ocr tesseract-ocr-deu
      - name: Build with Maven
        run: mvn --batch-mode -Pprod clean install
      - name: Upload war artifact
        uses: actions/upload-artifact@v2
        with:
          name: docs-web-ci.war
          path: docs-web/target/docs*.war

  build_docker_image:
    name: Publish to Docker Hub
    runs-on: ubuntu-latest
    needs: [build_and_publish]

    steps:
      -
        name: Checkout
        uses: actions/checkout@v2
      -
        name: Download war artifact
        uses: actions/download-artifact@v2
        with:
          name: docs-web-ci.war
          path: docs-web/target
      -
        name: Setup up Docker Buildx
        uses: docker/setup-buildx-action@v1
      -
        name: Login to DockerHub
        if: github.event_name != 'pull_request'
        uses: docker/login-action@v1
        with:
          username: ${{ secrets.DOCKERHUB_USERNAME }}
          password: ${{ secrets.DOCKERHUB_TOKEN }}
      -
        name: Populate Docker metadata
        id: metadata
        uses: docker/metadata-action@v3
        with:
          images: sismics/docs
          flavor: |
            latest=false
          tags: |
            type=ref,event=tag
            type=raw,value=latest,enable=${{ github.ref_type != 'tag' }}
          labels: |
            org.opencontainers.image.title = Teedy
            org.opencontainers.image.description = Teedy is an open source, lightweight document management system for individuals and businesses.
            org.opencontainers.image.created = ${{ github.event_created_at }}
            org.opencontainers.image.author = Sismics
            org.opencontainers.image.url = https://teedy.io/
            org.opencontainers.image.vendor = Sismics
            org.opencontainers.image.license = GPLv2
            org.opencontainers.image.version = ${{ github.event_head_commit.id }}
      -
        name: Build and push
        id: docker_build
        uses: docker/build-push-action@v2
        with:
          context: .
          push: ${{ github.event_name != 'pull_request' }}
          tags: ${{ steps.metadata.outputs.tags }}
          labels: ${{ steps.metadata.outputs.labels }}
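Reviewer note on the `Populate Docker metadata` step in this workflow: with `latest=false` in `flavor`, the two `tags` rules appear to yield the Git tag name on tag pushes and `latest` on every other ref. The sketch below models that reading in Python. It is a simplified illustration, not the implementation of `docker/metadata-action`, and the function name `image_tags` is hypothetical.

```python
def image_tags(ref_type: str, ref_name: str, image: str = "sismics/docs") -> list[str]:
    """Model the two tag rules from the workflow (an assumption-based sketch)."""
    tags = []
    # Rule 1: type=ref,event=tag -> use the Git tag name when a tag was pushed.
    if ref_type == "tag":
        tags.append(f"{image}:{ref_name}")
    # Rule 2: type=raw,value=latest,enable=(ref_type != 'tag') -> "latest" otherwise.
    if ref_type != "tag":
        tags.append(f"{image}:latest")
    return tags


print(image_tags("tag", "v1.11"))
print(image_tags("branch", "master"))
```

Under this reading, a push to `master` publishes `sismics/docs:latest` and a `v*` tag publishes only the versioned image, which is consistent with the image names listed in the README.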

7

.gitignore

vendored


@ -13,4 +13,9 @@ node_modules

|

|||||||

import_test

|

import_test

|

||||||

teedy-importer-linux

|

teedy-importer-linux

|

||||||

teedy-importer-macos

|

teedy-importer-macos

|

||||||

teedy-importer-win.exe

|

teedy-importer-win.exe

|

||||||

|

docs/*

|

||||||

|

!docs/.gitkeep

|

||||||

|

|

||||||

|

#macos

|

||||||

|

.DS_Store

|

||||||

|

|||||||

49

Dockerfile


@ -1,12 +1,26 @@

|

|||||||

FROM sismics/ubuntu-jetty:9.4.36

|

FROM ubuntu:22.04

|

||||||

LABEL maintainer="b.gamard@sismics.com"

|

LABEL maintainer="b.gamard@sismics.com"

|

||||||

|

|

||||||

|

# Run Debian in non interactive mode

|

||||||

|

ENV DEBIAN_FRONTEND noninteractive

|

||||||

|

|

||||||

|

# Configure env

|

||||||

|

ENV LANG C.UTF-8

|

||||||

|

ENV LC_ALL C.UTF-8

|

||||||

|

ENV JAVA_HOME /usr/lib/jvm/java-11-openjdk-amd64/

|

||||||

|

ENV JAVA_OPTIONS -Dfile.encoding=UTF-8 -Xmx1g

|

||||||

|

ENV JETTY_VERSION 11.0.20

|

||||||

|

ENV JETTY_HOME /opt/jetty

|

||||||

|

|

||||||

|

# Install packages

|

||||||

RUN apt-get update && \

|

RUN apt-get update && \

|

||||||

apt-get -y -q --no-install-recommends install \

|

apt-get -y -q --no-install-recommends install \

|

||||||

|

vim less procps unzip wget tzdata openjdk-11-jdk \

|

||||||

ffmpeg \

|

ffmpeg \

|

||||||

mediainfo \

|

mediainfo \

|

||||||

tesseract-ocr \

|

tesseract-ocr \

|

||||||

tesseract-ocr-ara \

|

tesseract-ocr-ara \

|

||||||

|

tesseract-ocr-ces \

|

||||||

tesseract-ocr-chi-sim \

|

tesseract-ocr-chi-sim \

|

||||||

tesseract-ocr-chi-tra \

|

tesseract-ocr-chi-tra \

|

||||||

tesseract-ocr-dan \

|

tesseract-ocr-dan \

|

||||||

@ -30,13 +44,32 @@ RUN apt-get update && \

|

|||||||

tesseract-ocr-tha \

|

tesseract-ocr-tha \

|

||||||

tesseract-ocr-tur \

|

tesseract-ocr-tur \

|

||||||

tesseract-ocr-ukr \

|

tesseract-ocr-ukr \

|

||||||

tesseract-ocr-vie && \

|

tesseract-ocr-vie \

|

||||||

apt-get clean && rm -rf /var/lib/apt/lists/*

|

tesseract-ocr-sqi \

|

||||||

|

&& apt-get clean && \

|

||||||

|

rm -rf /var/lib/apt/lists/*

|

||||||

|

RUN dpkg-reconfigure -f noninteractive tzdata

|

||||||

|

|

||||||

# Remove the embedded javax.mail jar from Jetty

|

# Install Jetty

|

||||||

RUN rm -f /opt/jetty/lib/mail/javax.mail.glassfish-*.jar

|

RUN wget -nv -O /tmp/jetty.tar.gz \

|

||||||

|

"https://repo1.maven.org/maven2/org/eclipse/jetty/jetty-home/${JETTY_VERSION}/jetty-home-${JETTY_VERSION}.tar.gz" \

|

||||||

|

&& tar xzf /tmp/jetty.tar.gz -C /opt \

|

||||||

|

&& mv /opt/jetty* /opt/jetty \

|

||||||

|

&& useradd jetty -U -s /bin/false \

|

||||||

|

&& chown -R jetty:jetty /opt/jetty \

|

||||||

|

&& mkdir /opt/jetty/webapps \

|

||||||

|

&& chmod +x /opt/jetty/bin/jetty.sh

|

||||||

|

|

||||||

ADD docs.xml /opt/jetty/webapps/docs.xml

|

EXPOSE 8080

|

||||||

ADD docs-web/target/docs-web-*.war /opt/jetty/webapps/docs.war

|

|

||||||

|

|

||||||

ENV JAVA_OPTIONS -Xmx1g

|

# Install app

|

||||||

|

RUN mkdir /app && \

|

||||||

|

cd /app && \

|

||||||

|

java -jar /opt/jetty/start.jar --add-modules=server,http,webapp,deploy

|

||||||

|

|

||||||

|

ADD docs.xml /app/webapps/docs.xml

|

||||||

|

ADD docs-web/target/docs-web-*.war /app/webapps/docs.war

|

||||||

|

|

||||||

|

WORKDIR /app

|

||||||

|

|

||||||

|

CMD ["java", "-jar", "/opt/jetty/start.jar"]

|

||||||

|

|||||||
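Reviewer note on the `Install Jetty` step in this Dockerfile: the download URL is assembled from `JETTY_VERSION`, which appears twice in the path. A minimal Python sketch of that expansion follows. The helper name `jetty_home_url` is hypothetical and exists only for illustration.

```python
def jetty_home_url(version: str) -> str:
    """Build the jetty-home tarball URL the same way the Dockerfile's wget line does."""
    base = "https://repo1.maven.org/maven2/org/eclipse/jetty/jetty-home"
    # The version is used both as the directory name and inside the file name.
    return f"{base}/{version}/jetty-home-{version}.tar.gz"


print(jetty_home_url("11.0.20"))
```

Bumping Jetty should then only require changing the `JETTY_VERSION` value, assuming the repository layout stays the same for the new release.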

105

README.md


@ -3,6 +3,7 @@

|

|||||||

</h3>

|

</h3>

|

||||||

|

|

||||||

[](https://www.gnu.org/licenses/old-licenses/gpl-2.0.en.html)

|

[](https://www.gnu.org/licenses/old-licenses/gpl-2.0.en.html)

|

||||||

|

[](https://github.com/sismics/docs/actions/workflows/build-deploy.yml)

|

||||||

|

|

||||||

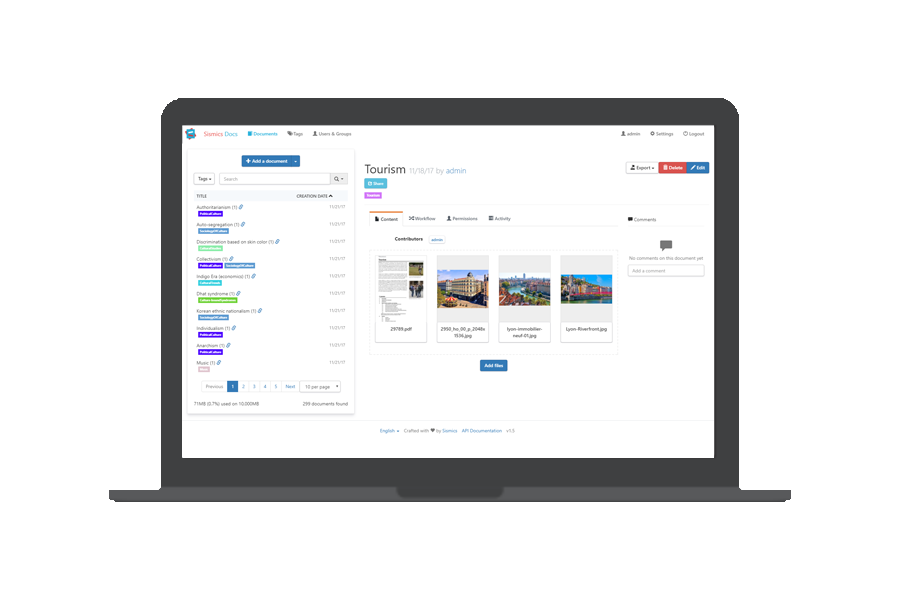

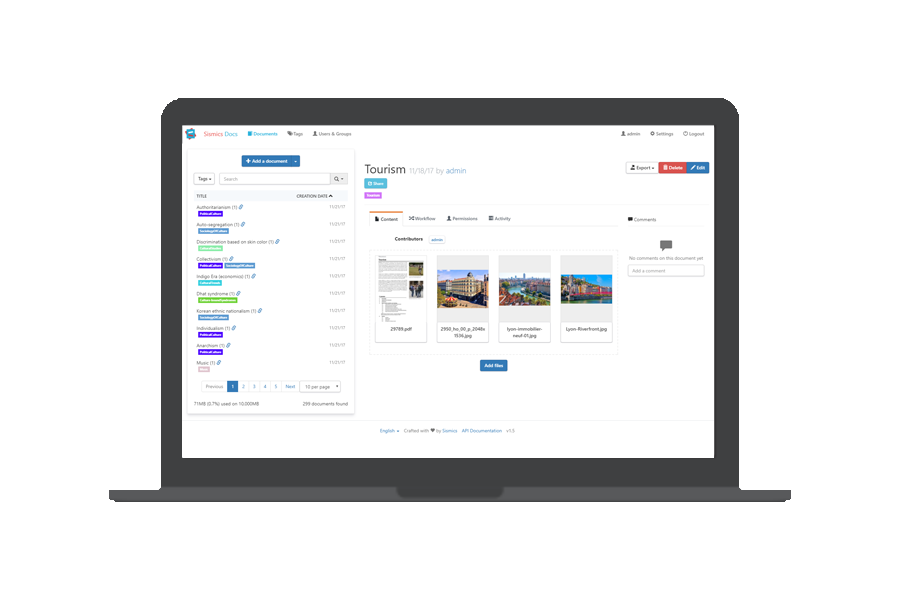

Teedy is an open source, lightweight document management system for individuals and businesses.

|

Teedy is an open source, lightweight document management system for individuals and businesses.

|

||||||

|

|

||||||

@ -14,8 +15,7 @@ Teedy is an open source, lightweight document management system for individuals

|

|||||||

|

|

||||||

|

|

||||||

|

|

||||||

Demo

|

# Demo

|

||||||

----

|

|

||||||

|

|

||||||

A demo is available at [demo.teedy.io](https://demo.teedy.io)

|

A demo is available at [demo.teedy.io](https://demo.teedy.io)

|

||||||

|

|

||||||

@ -23,8 +23,7 @@ A demo is available at [demo.teedy.io](https://demo.teedy.io)

|

|||||||

- "admin" login with "admin" password

|

- "admin" login with "admin" password

|

||||||

- "demo" login with "password" password

|

- "demo" login with "password" password

|

||||||

|

|

||||||

Features

|

# Features

|

||||||

--------

|

|

||||||

|

|

||||||

- Responsive user interface

|

- Responsive user interface

|

||||||

- Optical character recognition

|

- Optical character recognition

|

||||||

@ -54,21 +53,20 @@ Features

|

|||||||

- [Bulk files importer](https://github.com/sismics/docs/tree/master/docs-importer) (single or scan mode)

|

- [Bulk files importer](https://github.com/sismics/docs/tree/master/docs-importer) (single or scan mode)

|

||||||

- Tested to one million documents

|

- Tested to one million documents

|

||||||

|

|

||||||

Install with Docker

|

# Install with Docker

|

||||||

-------------------

|

|

||||||

|

|

||||||

A preconfigured Docker image is available, including OCR and media conversion tools, listening on port 8080. The database is an embedded H2 database but PostgreSQL is also supported for more performance.

|

A preconfigured Docker image is available, including OCR and media conversion tools, listening on port 8080. If no PostgreSQL config is provided, the database is an embedded H2 database. The H2 embedded database should only be used for testing. For production usage use the provided PostgreSQL configuration (check the Docker Compose example)

|

||||||

|

|

||||||

**The default admin password is "admin". Don't forget to change it before going to production.**

|

**The default admin password is "admin". Don't forget to change it before going to production.**

|

||||||

|

|

||||||

- Master branch, can be unstable. Not recommended for production use: `sismics/docs:latest`

|

- Master branch, can be unstable. Not recommended for production use: `sismics/docs:latest`

|

||||||

- Latest stable version: `sismics/docs:v1.10`

|

- Latest stable version: `sismics/docs:v1.11`

|

||||||

|

|

||||||

The data directory is `/data`. Don't forget to mount a volume on it.

|

The data directory is `/data`. Don't forget to mount a volume on it.

|

||||||

|

|

||||||

To build external URL, the server is expecting a `DOCS_BASE_URL` environment variable (for example https://teedy.mycompany.com)

|

To build external URL, the server is expecting a `DOCS_BASE_URL` environment variable (for example https://teedy.mycompany.com)

|

||||||

|

|

||||||

### Available environment variables

|

## Available environment variables

|

||||||

|

|

||||||

- General

|

- General

|

||||||

- `DOCS_BASE_URL`: The base url used by the application. Generated url's will be using this as base.

|

- `DOCS_BASE_URL`: The base url used by the application. Generated url's will be using this as base.

|

||||||

@ -83,6 +81,7 @@ To build external URL, the server is expecting a `DOCS_BASE_URL` environment var

|

|||||||

- `DATABASE_URL`: The jdbc connection string to be used by `hibernate`.

|

- `DATABASE_URL`: The jdbc connection string to be used by `hibernate`.

|

||||||

- `DATABASE_USER`: The user which should be used for the database connection.

|

- `DATABASE_USER`: The user which should be used for the database connection.

|

||||||

- `DATABASE_PASSWORD`: The password to be used for the database connection.

|

- `DATABASE_PASSWORD`: The password to be used for the database connection.

|

||||||

|

- `DATABASE_POOL_SIZE`: The pool size to be used for the database connection.

|

||||||

|

|

||||||

- Language

|

- Language

|

||||||

- `DOCS_DEFAULT_LANGUAGE`: The language which will be used as default. Currently supported values are:

|

- `DOCS_DEFAULT_LANGUAGE`: The language which will be used as default. Currently supported values are:

|

||||||

@ -94,41 +93,19 @@ To build external URL, the server is expecting a `DOCS_BASE_URL` environment var

|

|||||||

- `DOCS_SMTP_USERNAME`: The username to be used.

|

- `DOCS_SMTP_USERNAME`: The username to be used.

|

||||||

- `DOCS_SMTP_PASSWORD`: The password to be used.

|

- `DOCS_SMTP_PASSWORD`: The password to be used.

|

||||||

|

|

||||||

### Examples

|

## Examples

|

||||||

|

|

||||||

In the following examples some passwords are exposed in cleartext. This was done in order to keep the examples simple. We strongly encourage you to use variables with an `.env` file or other means to securely store your passwords.

|

In the following examples some passwords are exposed in cleartext. This was done in order to keep the examples simple. We strongly encourage you to use variables with an `.env` file or other means to securely store your passwords.

|

||||||

|

|

||||||

#### Using the internal database

|

|

||||||

|

### Default, using PostgreSQL

|

||||||

|

|

||||||

```yaml

|

```yaml

|

||||||

version: '3'

|

version: '3'

|

||||||

services:

|

services:

|

||||||

# Teedy Application

|

# Teedy Application

|

||||||

teedy-server:

|

teedy-server:

|

||||||

image: sismics/docs:v1.10

|

image: sismics/docs:v1.11

|

||||||

restart: unless-stopped

|

|

||||||

ports:

|

|

||||||

# Map internal port to host

|

|

||||||

- 8080:8080

|

|

||||||

environment:

|

|

||||||

# Base url to be used

|

|

||||||

DOCS_BASE_URL: "https://docs.example.com"

|

|

||||||

# Set the admin email

|

|

||||||

DOCS_ADMIN_EMAIL_INIT: "admin@example.com"

|

|

||||||

# Set the admin password (in this example: "superSecure")

|

|

||||||

DOCS_ADMIN_PASSWORD_INIT: "$$2a$$05$$PcMNUbJvsk7QHFSfEIDaIOjk1VI9/E7IPjTKx.jkjPxkx2EOKSoPS"

|

|

||||||

volumes:

|

|

||||||

- ./docs/data:/data

|

|

||||||

```

|

|

||||||

|

|

||||||

#### Using PostgreSQL

|

|

||||||

|

|

||||||

```yaml

|

|

||||||

version: '3'

|

|

||||||

services:

|

|

||||||

# Teedy Application

|

|

||||||

teedy-server:

|

|

||||||

image: sismics/docs:v1.10

|

|

||||||

restart: unless-stopped

|

restart: unless-stopped

|

||||||

ports:

|

ports:

|

||||||

# Map internal port to host

|

# Map internal port to host

|

||||||

@ -146,6 +123,7 @@ services:

|

|||||||

DATABASE_URL: "jdbc:postgresql://teedy-db:5432/teedy"

|

DATABASE_URL: "jdbc:postgresql://teedy-db:5432/teedy"

|

||||||

DATABASE_USER: "teedy_db_user"

|

DATABASE_USER: "teedy_db_user"

|

||||||

DATABASE_PASSWORD: "teedy_db_password"

|

DATABASE_PASSWORD: "teedy_db_password"

|

||||||

|

DATABASE_POOL_SIZE: "10"

|

||||||

volumes:

|

volumes:

|

||||||

- ./docs/data:/data

|

- ./docs/data:/data

|

||||||

networks:

|

networks:

|

||||||

@ -179,10 +157,32 @@ networks:

|

|||||||

driver: bridge

|

driver: bridge

|

||||||

```

|

```

|

||||||

|

|

||||||

Manual installation

|

### Using the internal database (only for testing)

|

||||||

-------------------

|

|

||||||

|

|

||||||

#### Requirements

|

```yaml

|

||||||

|

version: '3'

|

||||||

|

services:

|

||||||

|

# Teedy Application

|

||||||

|

teedy-server:

|

||||||

|

image: sismics/docs:v1.11

|

||||||

|

restart: unless-stopped

|

||||||

|

ports:

|

||||||

|

# Map internal port to host

|

||||||

|

- 8080:8080

|

||||||

|

environment:

|

||||||

|

# Base url to be used

|

||||||

|

DOCS_BASE_URL: "https://docs.example.com"

|

||||||

|

# Set the admin email

|

||||||

|

DOCS_ADMIN_EMAIL_INIT: "admin@example.com"

|

||||||

|

# Set the admin password (in this example: "superSecure")

|

||||||

|

DOCS_ADMIN_PASSWORD_INIT: "$$2a$$05$$PcMNUbJvsk7QHFSfEIDaIOjk1VI9/E7IPjTKx.jkjPxkx2EOKSoPS"

|

||||||

|

volumes:

|

||||||

|

- ./docs/data:/data

|

||||||

|

```

|

||||||

|

|

||||||

|

# Manual installation

|

||||||

|

|

||||||

|

## Requirements

|

||||||

|

|

||||||

- Java 11

|

- Java 11

|

||||||

- Tesseract 4 for OCR

|

- Tesseract 4 for OCR

|

||||||

@ -190,13 +190,12 @@ Manual installation

|

|||||||

- mediainfo for video metadata extraction

|

- mediainfo for video metadata extraction

|

||||||

- A webapp server like [Jetty](http://eclipse.org/jetty/) or [Tomcat](http://tomcat.apache.org/)

|

- A webapp server like [Jetty](http://eclipse.org/jetty/) or [Tomcat](http://tomcat.apache.org/)

|

||||||

|

|

||||||

#### Download

|

## Download

|

||||||

|

|

||||||

The latest release is downloadable here: <https://github.com/sismics/docs/releases> in WAR format.

|

The latest release is downloadable here: <https://github.com/sismics/docs/releases> in WAR format.

|

||||||

**The default admin password is "admin". Don't forget to change it before going to production.**

|

**The default admin password is "admin". Don't forget to change it before going to production.**

|

||||||

|

|

||||||

How to build Teedy from the sources

|

## How to build Teedy from the sources

|

||||||

----------------------------------

|

|

||||||

|

|

||||||

Prerequisites: JDK 11, Maven 3, NPM, Grunt, Tesseract 4

|

Prerequisites: JDK 11, Maven 3, NPM, Grunt, Tesseract 4

|

||||||

|

|

||||||

@ -209,35 +208,39 @@ Teedy is organized in several Maven modules:

|

|||||||

First off, clone the repository: `git clone git://github.com/sismics/docs.git`

|

First off, clone the repository: `git clone git://github.com/sismics/docs.git`

|

||||||

or download the sources from GitHub.

|

or download the sources from GitHub.

|

||||||

|

|

||||||

#### Launch the build

|

### Launch the build

|

||||||

|

|

||||||

From the root directory:

|

From the root directory:

|

||||||

|

|

||||||

mvn clean -DskipTests install

|

```console

|

||||||

|

mvn clean -DskipTests install

|

||||||

|

```

|

||||||

|

|

||||||

#### Run a stand-alone version

|

### Run a stand-alone version

|

||||||

|

|

||||||

From the `docs-web` directory:

|

From the `docs-web` directory:

|

||||||

|

|

||||||

mvn jetty:run

|

```console

|

||||||

|

mvn jetty:run

|

||||||

|

```

|

||||||

|

|

||||||

#### Build a .war to deploy to your servlet container

|

### Build a .war to deploy to your servlet container

|

||||||

|

|

||||||

From the `docs-web` directory:

|

From the `docs-web` directory:

|

||||||

|

|

||||||

mvn -Pprod -DskipTests clean install

|

```console

|

||||||

|

mvn -Pprod -DskipTests clean install

|

||||||

|

```

|

||||||

|

|

||||||

You will get your deployable WAR in the `docs-web/target` directory.

|

You will get your deployable WAR in the `docs-web/target` directory.

|

||||||

|

|

||||||

Contributing

|

# Contributing

|

||||||

------------

|

|

||||||

|

|

||||||

All contributions are more than welcomed. Contributions may close an issue, fix a bug (reported or not reported), improve the existing code, add new feature, and so on.

|

All contributions are more than welcomed. Contributions may close an issue, fix a bug (reported or not reported), improve the existing code, add new feature, and so on.

|

||||||

|

|

||||||

The `master` branch is the default and base branch for the project. It is used for development and all Pull Requests should go there.

|

The `master` branch is the default and base branch for the project. It is used for development and all Pull Requests should go there.

|

||||||

|

|

||||||

License

|

# License

|

||||||

-------

|

|

||||||

|

|

||||||

Teedy is released under the terms of the GPL license. See `COPYING` for more

|

Teedy is released under the terms of the GPL license. See `COPYING` for more

|

||||||

information or see <http://opensource.org/licenses/GPL-2.0>.

|

information or see <http://opensource.org/licenses/GPL-2.0>.

|

||||||

|

|||||||
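Reviewer note on the `DOCS_ADMIN_PASSWORD_INIT` values in the README's compose examples: each `$` of the bcrypt hash is written as `$$`, because Docker Compose treats a single `$` as the start of a variable interpolation. The Python sketch below shows that escaping step. The helper name `escape_for_compose` is hypothetical, and producing the bcrypt hash itself is out of scope here.

```python
def escape_for_compose(value: str) -> str:
    """Double every '$' so Docker Compose passes the value through literally."""
    return value.replace("$", "$$")


# Unescaped form of the hash used in the README examples.
raw_hash = "$2a$05$PcMNUbJvsk7QHFSfEIDaIOjk1VI9/E7IPjTKx.jkjPxkx2EOKSoPS"
print(escape_for_compose(raw_hash))
```

The printed value is what goes between the quotes in the compose file. A hash pasted without this escaping would likely be mangled by interpolation before it reaches the container.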

18

docker-compose.yml

Normal file


@ -0,0 +1,18 @@

version: '3'
services:
# Teedy Application
  teedy-server:
    image: sismics/docs:v1.10
    restart: unless-stopped
    ports:
      # Map internal port to host
      - 8080:8080
    environment:
      # Base url to be used
      DOCS_BASE_URL: "https://docs.example.com"
      # Set the admin email
      DOCS_ADMIN_EMAIL_INIT: "admin@example.com"
      # Set the admin password (in this example: "superSecure")
      DOCS_ADMIN_PASSWORD_INIT: "$$2a$$05$$PcMNUbJvsk7QHFSfEIDaIOjk1VI9/E7IPjTKx.jkjPxkx2EOKSoPS"
    volumes:
      - ./docs/data:/data

@ -5,10 +5,10 @@

|

|||||||

<parent>

|

<parent>

|

||||||

<groupId>com.sismics.docs</groupId>

|

<groupId>com.sismics.docs</groupId>

|

||||||

<artifactId>docs-parent</artifactId>

|

<artifactId>docs-parent</artifactId>

|

||||||

<version>1.10</version>

|

<version>1.12-SNAPSHOT</version>

|

||||||

<relativePath>..</relativePath>

|

<relativePath>../pom.xml</relativePath>

|

||||||

</parent>

|

</parent>

|

||||||

|

|

||||||

<modelVersion>4.0.0</modelVersion>

|

<modelVersion>4.0.0</modelVersion>

|

||||||

<artifactId>docs-core</artifactId>

|

<artifactId>docs-core</artifactId>

|

||||||

<packaging>jar</packaging>

|

<packaging>jar</packaging>

|

||||||

@ -17,20 +17,10 @@

|

|||||||

<dependencies>

|

<dependencies>

|

||||||

<!-- Persistence layer dependencies -->

|

<!-- Persistence layer dependencies -->

|

||||||

<dependency>

|

<dependency>

|

||||||

<groupId>org.hibernate</groupId>

|

<groupId>org.hibernate.orm</groupId>

|

||||||

<artifactId>hibernate-core</artifactId>

|

<artifactId>hibernate-core</artifactId>

|

||||||

</dependency>

|

</dependency>

|

||||||

|

|

||||||

<dependency>

|

|

||||||

<groupId>org.hibernate</groupId>

|

|

||||||

<artifactId>hibernate-entitymanager</artifactId>

|

|

||||||

</dependency>

|

|

||||||

|

|

||||||

<dependency>

|

|

||||||

<groupId>org.hibernate</groupId>

|

|

||||||

<artifactId>hibernate-c3p0</artifactId>

|

|

||||||

</dependency>

|

|

||||||

|

|

||||||

<!-- Other external dependencies -->

|

<!-- Other external dependencies -->

|

||||||

<dependency>

|

<dependency>

|

||||||

<groupId>joda-time</groupId>

|

<groupId>joda-time</groupId>

|

||||||

@ -41,30 +31,30 @@

|

|||||||

<groupId>com.google.guava</groupId>

|

<groupId>com.google.guava</groupId>

|

||||||

<artifactId>guava</artifactId>

|

<artifactId>guava</artifactId>

|

||||||

</dependency>

|

</dependency>

|

||||||

|

|

||||||

<dependency>

|

<dependency>

|

||||||

<groupId>org.apache.commons</groupId>

|

<groupId>org.apache.commons</groupId>

|

||||||

<artifactId>commons-compress</artifactId>

|

<artifactId>commons-compress</artifactId>

|

||||||

</dependency>

|

</dependency>

|

||||||

|

|

||||||

<dependency>

|

<dependency>

|

||||||

<groupId>commons-lang</groupId>

|

<groupId>org.apache.commons</groupId>

|

||||||

<artifactId>commons-lang</artifactId>

|

<artifactId>commons-lang3</artifactId>

|

||||||

</dependency>

|

</dependency>

|

||||||

|

|

||||||

<dependency>

|

<dependency>

|

||||||

<groupId>org.apache.commons</groupId>

|

<groupId>org.apache.commons</groupId>

|

||||||

<artifactId>commons-email</artifactId>

|

<artifactId>commons-email</artifactId>

|

||||||

</dependency>

|

</dependency>

|

||||||

|

|

||||||

<dependency>

|

<dependency>

|

||||||

<groupId>org.freemarker</groupId>

|

<groupId>org.freemarker</groupId>

|

||||||

<artifactId>freemarker</artifactId>

|

<artifactId>freemarker</artifactId>

|

||||||

</dependency>

|

</dependency>

|

||||||

|

|

||||||

<dependency>

|

<dependency>

|

||||||

<groupId>org.glassfish</groupId>

|

<groupId>jakarta.json</groupId>

|

||||||

<artifactId>javax.json</artifactId>

|

<artifactId>jakarta.json-api</artifactId>

|

||||||

</dependency>

|

</dependency>

|

||||||

|

|

||||||

<dependency>

|

<dependency>

|

||||||

@ -76,17 +66,17 @@

|

|||||||

<groupId>log4j</groupId>

|

<groupId>log4j</groupId>

|

||||||

<artifactId>log4j</artifactId>

|

<artifactId>log4j</artifactId>

|

||||||

</dependency>

|

</dependency>

|

||||||

|

|

||||||

<dependency>

|

<dependency>

|

||||||

<groupId>org.slf4j</groupId>

|

<groupId>org.slf4j</groupId>

|

||||||

<artifactId>slf4j-log4j12</artifactId>

|

<artifactId>slf4j-log4j12</artifactId>

|

||||||

</dependency>

|

</dependency>

|

||||||

|

|

||||||

<dependency>

|

<dependency>

|

||||||

<groupId>org.slf4j</groupId>

|

<groupId>org.slf4j</groupId>

|

||||||

<artifactId>slf4j-api</artifactId>

|

<artifactId>slf4j-api</artifactId>

|

||||||

</dependency>

|

</dependency>

|

||||||

|

|

||||||

<dependency>

|

<dependency>

|

||||||

<groupId>org.slf4j</groupId>

|

<groupId>org.slf4j</groupId>

|

||||||

<artifactId>jcl-over-slf4j</artifactId>

|

<artifactId>jcl-over-slf4j</artifactId>

|

||||||

@ -96,17 +86,17 @@

|

|||||||

<groupId>at.favre.lib</groupId>

|

<groupId>at.favre.lib</groupId>

|

||||||

<artifactId>bcrypt</artifactId>

|

<artifactId>bcrypt</artifactId>

|

||||||

</dependency>

|

</dependency>

|

||||||

|

|

||||||

<dependency>

|

<dependency>

|

||||||

<groupId>org.apache.lucene</groupId>

|

<groupId>org.apache.lucene</groupId>

|

||||||

<artifactId>lucene-core</artifactId>

|

<artifactId>lucene-core</artifactId>

|

||||||

</dependency>

|

</dependency>

|

||||||

|

|

||||||

<dependency>

|

<dependency>

|

||||||

<groupId>org.apache.lucene</groupId>

|

<groupId>org.apache.lucene</groupId>

|

||||||

<artifactId>lucene-analyzers-common</artifactId>

|

<artifactId>lucene-analyzers-common</artifactId>

|

||||||

</dependency>

|

</dependency>

|

||||||

|

|

||||||

<dependency>

|

<dependency>

|

||||||

<groupId>org.apache.lucene</groupId>

|

<groupId>org.apache.lucene</groupId>

|

||||||

<artifactId>lucene-queryparser</artifactId>

|

<artifactId>lucene-queryparser</artifactId>

|

||||||

@ -122,11 +112,6 @@

|

|||||||

<artifactId>lucene-highlighter</artifactId>

|

<artifactId>lucene-highlighter</artifactId>

|

||||||

</dependency>

|

</dependency>

|

||||||

|

|

||||||

<dependency>

|

|

||||||

<groupId>com.sun.mail</groupId>

|

|

||||||

<artifactId>javax.mail</artifactId>

|

|

||||||

</dependency>

|

|

||||||

|

|

||||||

<dependency>

|

<dependency>

|

||||||

<groupId>com.squareup.okhttp3</groupId>

|

<groupId>com.squareup.okhttp3</groupId>

|

||||||

<artifactId>okhttp</artifactId>

|

<artifactId>okhttp</artifactId>

|

||||||

@ -134,7 +119,12 @@

|

|||||||

|

|

||||||

<dependency>

|

<dependency>

|

||||||

<groupId>org.apache.directory.api</groupId>

|

<groupId>org.apache.directory.api</groupId>

|

||||||

<artifactId>api-all</artifactId>

|

<artifactId>api-ldap-client-api</artifactId>

|

||||||

|

</dependency>

|

||||||

|

|

||||||

|

<dependency>

|

||||||

|

<groupId>org.apache.directory.api</groupId>

|

||||||

|

<artifactId>api-ldap-codec-standalone</artifactId>

|

||||||

</dependency>

|

</dependency>

|

||||||

|

|

||||||

<!-- Only there to read old index and rebuild them -->

|

<!-- Only there to read old index and rebuild them -->

|

||||||

@ -142,22 +132,22 @@

|

|||||||

<groupId>org.apache.lucene</groupId>

|

<groupId>org.apache.lucene</groupId>

|

||||||

<artifactId>lucene-backward-codecs</artifactId>

|

<artifactId>lucene-backward-codecs</artifactId>

|

||||||

</dependency>

|

</dependency>

|

||||||

|

|

||||||

<dependency>

|

<dependency>

|

||||||

<groupId>org.imgscalr</groupId>

|

<groupId>org.imgscalr</groupId>

|

||||||

<artifactId>imgscalr-lib</artifactId>

|

<artifactId>imgscalr-lib</artifactId>

|

||||||

</dependency>

|

</dependency>

|

||||||

|

|

||||||

<dependency>

|

<dependency>

|

||||||

<groupId>org.apache.pdfbox</groupId>

|

<groupId>org.apache.pdfbox</groupId>

|

||||||

<artifactId>pdfbox</artifactId>

|

<artifactId>pdfbox</artifactId>

|

||||||

</dependency>

|

</dependency>

|

||||||

|

|

||||||

<dependency>

|

<dependency>

|

||||||

<groupId>org.bouncycastle</groupId>

|

<groupId>org.bouncycastle</groupId>

|

||||||

<artifactId>bcprov-jdk15on</artifactId>

|

<artifactId>bcprov-jdk15on</artifactId>

|

||||||

</dependency>

|

</dependency>

|

||||||

|

|

||||||

<dependency>

|

<dependency>

|

||||||

<groupId>fr.opensagres.xdocreport</groupId>

|

<groupId>fr.opensagres.xdocreport</groupId>

|

||||||

<artifactId>fr.opensagres.odfdom.converter.pdf</artifactId>

|

<artifactId>fr.opensagres.odfdom.converter.pdf</artifactId>

|

||||||

@ -195,39 +185,20 @@

|

|||||||

<artifactId>postgresql</artifactId>

|

<artifactId>postgresql</artifactId>

|

||||||

</dependency>

|

</dependency>

|

||||||

|

|

||||||

<!-- JDK 11 JAXB dependencies -->

|

|

||||||

<dependency>

|

|

||||||

<groupId>javax.xml.bind</groupId>

|

|

||||||

<artifactId>jaxb-api</artifactId>

|

|

||||||

<version>2.3.0</version>

|

|

||||||

</dependency>

|

|

||||||

|

|

||||||

<dependency>

|

|

||||||

<groupId>com.sun.xml.bind</groupId>

|

|

||||||

<artifactId>jaxb-core</artifactId>

|

|

||||||

<version>2.3.0</version>

|

|

||||||

</dependency>

|

|

||||||

|

|

||||||

<dependency>

|

|

||||||

<groupId>com.sun.xml.bind</groupId>

|

|

||||||

<artifactId>jaxb-impl</artifactId>

|

|

||||||

<version>2.3.0</version>

|

|

||||||

</dependency>

|

|

||||||

|

|

||||||

<!-- Test dependencies -->

|

<!-- Test dependencies -->

|

||||||

<dependency>

|

<dependency>

|

||||||

<groupId>junit</groupId>

|

<groupId>junit</groupId>

|

||||||

<artifactId>junit</artifactId>

|

<artifactId>junit</artifactId>

|

||||||

<scope>test</scope>

|

<scope>test</scope>

|

||||||

</dependency>

|

</dependency>

|

||||||

|

|

||||||

<dependency>

|

<dependency>

|

||||||

<groupId>com.h2database</groupId>

|

<groupId>com.h2database</groupId>

|

||||||

<artifactId>h2</artifactId>

|

<artifactId>h2</artifactId>

|

||||||

<scope>test</scope>

|

<scope>test</scope>

|

||||||

</dependency>

|

</dependency>

|

||||||

</dependencies>

|

</dependencies>

|

||||||

|

|

||||||

<profiles>

|

<profiles>

|

||||||

<!-- Development profile (active by default) -->

|

<!-- Development profile (active by default) -->

|

||||||

<profile>

|

<profile>

|

||||||

@ -239,7 +210,7 @@

|

|||||||

<value>dev</value>

|

<value>dev</value>

|

||||||

</property>

|

</property>

|

||||||

</activation>

|

</activation>

|

||||||

|

|

||||||

<build>

|

<build>

|

||||||

<resources>

|

<resources>

|

||||||

<resource>

|

<resource>

|

||||||

@ -255,7 +226,7 @@

|

|||||||

<id>prod</id>

|

<id>prod</id>

|

||||||

</profile>

|

</profile>

|

||||||

</profiles>

|

</profiles>

|

||||||

|

|

||||||

<build>

|

<build>

|

||||||

<resources>

|

<resources>

|

||||||

<resource>

|

<resource>

|

||||||

|

|||||||

@ -1,9 +1,9 @@

|

|||||||

package com.sismics.docs.core.constant;

|

package com.sismics.docs.core.constant;

|

||||||

|

|

||||||

/**

|

/**

|

||||||

* Configuration parameters.

|

* Configuration parameters.

|

||||||

*

|

*

|

||||||

* @author jtremeaux

|

* @author jtremeaux

|

||||||

*/

|

*/

|

||||||

public enum ConfigType {

|

public enum ConfigType {

|

||||||

/**

|

/**

|

||||||

@ -20,6 +20,11 @@ public enum ConfigType {

|

|||||||

*/

|

*/

|

||||||

GUEST_LOGIN,

|

GUEST_LOGIN,

|

||||||

|

|

||||||

|

/**

|

||||||

|

* OCR enabled.

|

||||||

|

*/

|

||||||

|

OCR_ENABLED,

|

||||||

|

|

||||||

/**

|

/**

|

||||||

* Default language.

|

* Default language.

|

||||||

*/

|

*/

|

||||||

@ -40,6 +45,7 @@ public enum ConfigType {

|

|||||||

INBOX_ENABLED,

|

INBOX_ENABLED,

|

||||||

INBOX_HOSTNAME,

|

INBOX_HOSTNAME,

|

||||||

INBOX_PORT,

|

INBOX_PORT,

|

||||||

|

INBOX_STARTTLS,

|

||||||

INBOX_USERNAME,

|

INBOX_USERNAME,

|

||||||

INBOX_PASSWORD,

|

INBOX_PASSWORD,

|

||||||

INBOX_FOLDER,

|

INBOX_FOLDER,

|

||||||

@ -53,6 +59,7 @@ public enum ConfigType {

|

|||||||

LDAP_ENABLED,

|

LDAP_ENABLED,

|

||||||

LDAP_HOST,

|

LDAP_HOST,

|

||||||

LDAP_PORT,

|

LDAP_PORT,

|

||||||

|

LDAP_USESSL,

|

||||||

LDAP_ADMIN_DN,

|

LDAP_ADMIN_DN,

|

||||||

LDAP_ADMIN_PASSWORD,

|

LDAP_ADMIN_PASSWORD,

|

||||||

LDAP_BASE_DN,

|

LDAP_BASE_DN,

|

||||||

|

|||||||

@ -43,7 +43,7 @@ public class Constants {

|

|||||||

/**

|

/**

|

||||||

* Supported document languages.

|

* Supported document languages.

|

||||||

*/

|

*/

|

||||||

public static final List<String> SUPPORTED_LANGUAGES = Lists.newArrayList("eng", "fra", "ita", "deu", "spa", "por", "pol", "rus", "ukr", "ara", "hin", "chi_sim", "chi_tra", "jpn", "tha", "kor", "nld", "tur", "heb", "hun", "fin", "swe", "lav", "dan", "nor", "vie");

|

public static final List<String> SUPPORTED_LANGUAGES = Lists.newArrayList("eng", "fra", "ita", "deu", "spa", "por", "pol", "rus", "ukr", "ara", "hin", "chi_sim", "chi_tra", "jpn", "tha", "kor", "nld", "tur", "heb", "hun", "fin", "swe", "lav", "dan", "nor", "vie", "ces", "sqi");

|

||||||

|

|

||||||

/**

|

/**

|

||||||

* Base URL environment variable.

|

* Base URL environment variable.

|

||||||

|

|||||||

@ -10,8 +10,8 @@ import com.sismics.docs.core.util.AuditLogUtil;

|

|||||||

import com.sismics.docs.core.util.SecurityUtil;

|

import com.sismics.docs.core.util.SecurityUtil;

|

||||||

import com.sismics.util.context.ThreadLocalContext;

|

import com.sismics.util.context.ThreadLocalContext;

|

||||||

|

|

||||||

import javax.persistence.EntityManager;

|

import jakarta.persistence.EntityManager;

|

||||||

import javax.persistence.Query;

|

import jakarta.persistence.Query;

|

||||||

import java.util.ArrayList;

|

import java.util.ArrayList;

|

||||||

import java.util.Date;

|

import java.util.Date;

|

||||||

import java.util.List;

|

import java.util.List;

|

||||||

|

|||||||

@ -12,7 +12,7 @@ import com.sismics.docs.core.util.jpa.QueryParam;

|

|||||||

import com.sismics.docs.core.util.jpa.SortCriteria;

|

import com.sismics.docs.core.util.jpa.SortCriteria;

|

||||||

import com.sismics.util.context.ThreadLocalContext;

|

import com.sismics.util.context.ThreadLocalContext;

|

||||||

|

|

||||||

import javax.persistence.EntityManager;

|

import jakarta.persistence.EntityManager;

|

||||||

import java.sql.Timestamp;

|

import java.sql.Timestamp;

|

||||||

import java.util.*;

|

import java.util.*;

|

||||||

|

|

||||||

|

|||||||

@ -4,8 +4,8 @@ import com.sismics.docs.core.model.jpa.AuthenticationToken;

|

|||||||

import com.sismics.util.context.ThreadLocalContext;

|

import com.sismics.util.context.ThreadLocalContext;

|

||||||

import org.joda.time.DateTime;

|

import org.joda.time.DateTime;

|

||||||

|

|

||||||

import javax.persistence.EntityManager;

|

import jakarta.persistence.EntityManager;

|

||||||

import javax.persistence.Query;

|

import jakarta.persistence.Query;

|

||||||

import java.util.Date;

|

import java.util.Date;

|

||||||

import java.util.List;

|

import java.util.List;

|

||||||

import java.util.UUID;

|

import java.util.UUID;

|

||||||

|

|||||||

@ -6,9 +6,9 @@ import com.sismics.docs.core.model.jpa.Comment;

|

|||||||

import com.sismics.docs.core.util.AuditLogUtil;

|

import com.sismics.docs.core.util.AuditLogUtil;

|

||||||

import com.sismics.util.context.ThreadLocalContext;

|

import com.sismics.util.context.ThreadLocalContext;

|

||||||

|

|

||||||

import javax.persistence.EntityManager;

|

import jakarta.persistence.EntityManager;

|

||||||

import javax.persistence.NoResultException;

|

import jakarta.persistence.NoResultException;

|

||||||

import javax.persistence.Query;

|

import jakarta.persistence.Query;

|

||||||

import java.sql.Timestamp;

|

import java.sql.Timestamp;

|

||||||

import java.util.ArrayList;

|

import java.util.ArrayList;

|

||||||

import java.util.Date;

|

import java.util.Date;

|

||||||

|

|||||||

@ -4,8 +4,8 @@ import com.sismics.docs.core.constant.ConfigType;

|

|||||||

import com.sismics.docs.core.model.jpa.Config;

|

import com.sismics.docs.core.model.jpa.Config;

|

||||||

import com.sismics.util.context.ThreadLocalContext;

|

import com.sismics.util.context.ThreadLocalContext;

|

||||||

|

|

||||||

import javax.persistence.EntityManager;

|

import jakarta.persistence.EntityManager;

|

||||||

import javax.persistence.NoResultException;

|

import jakarta.persistence.NoResultException;

|

||||||

|

|

||||||

/**

|

/**

|

||||||

* Configuration parameter DAO.

|

* Configuration parameter DAO.

|

||||||

|

|||||||

@ -4,8 +4,8 @@ import com.sismics.docs.core.dao.dto.ContributorDto;

|

|||||||

import com.sismics.docs.core.model.jpa.Contributor;

|

import com.sismics.docs.core.model.jpa.Contributor;

|

||||||

import com.sismics.util.context.ThreadLocalContext;

|

import com.sismics.util.context.ThreadLocalContext;

|

||||||

|

|

||||||

import javax.persistence.EntityManager;

|

import jakarta.persistence.EntityManager;

|

||||||

import javax.persistence.Query;

|

import jakarta.persistence.Query;

|

||||||

import java.util.ArrayList;

|

import java.util.ArrayList;

|

||||||

import java.util.List;

|

import java.util.List;

|

||||||

import java.util.UUID;

|

import java.util.UUID;

|

||||||

|

|||||||

@ -7,9 +7,10 @@ import com.sismics.docs.core.model.jpa.Document;

|

|||||||

import com.sismics.docs.core.util.AuditLogUtil;

|

import com.sismics.docs.core.util.AuditLogUtil;

|

||||||

import com.sismics.util.context.ThreadLocalContext;

|

import com.sismics.util.context.ThreadLocalContext;

|

||||||

|

|

||||||

import javax.persistence.EntityManager;

|

import jakarta.persistence.EntityManager;

|

||||||

import javax.persistence.NoResultException;

|

import jakarta.persistence.NoResultException;

|

||||||

import javax.persistence.Query;

|

import jakarta.persistence.Query;

|

||||||

|

import jakarta.persistence.TypedQuery;

|

||||||

import java.sql.Timestamp;

|

import java.sql.Timestamp;

|

||||||

import java.util.Date;

|

import java.util.Date;

|

||||||

import java.util.List;

|

import java.util.List;

|

||||||

@ -50,10 +51,9 @@ public class DocumentDao {

|

|||||||

* @param limit Limit

|

* @param limit Limit

|

||||||

* @return List of documents

|

* @return List of documents

|

||||||

*/

|

*/

|

||||||

@SuppressWarnings("unchecked")

|

|

||||||

public List<Document> findAll(int offset, int limit) {

|

public List<Document> findAll(int offset, int limit) {

|

||||||

EntityManager em = ThreadLocalContext.get().getEntityManager();

|

EntityManager em = ThreadLocalContext.get().getEntityManager();

|

||||||

Query q = em.createQuery("select d from Document d where d.deleteDate is null");

|

TypedQuery<Document> q = em.createQuery("select d from Document d where d.deleteDate is null", Document.class);

|

||||||

q.setFirstResult(offset);

|

q.setFirstResult(offset);

|

||||||

q.setMaxResults(limit);

|

q.setMaxResults(limit);

|

||||||

return q.getResultList();

|

return q.getResultList();

|

||||||

@ -65,10 +65,9 @@ public class DocumentDao {

|

|||||||

* @param userId User ID

|

* @param userId User ID

|

||||||

* @return List of documents

|

* @return List of documents

|

||||||

*/

|

*/

|

||||||

@SuppressWarnings("unchecked")

|

|

||||||

public List<Document> findByUserId(String userId) {

|

public List<Document> findByUserId(String userId) {

|

||||||

EntityManager em = ThreadLocalContext.get().getEntityManager();

|

EntityManager em = ThreadLocalContext.get().getEntityManager();

|

||||||

Query q = em.createQuery("select d from Document d where d.userId = :userId and d.deleteDate is null");

|

TypedQuery<Document> q = em.createQuery("select d from Document d where d.userId = :userId and d.deleteDate is null", Document.class);

|

||||||

q.setParameter("userId", userId);

|

q.setParameter("userId", userId);

|

||||||

return q.getResultList();

|

return q.getResultList();

|

||||||

}

|

}

|

||||||

@ -88,7 +87,7 @@ public class DocumentDao {

|

|||||||

}

|

}

|

||||||

|

|

||||||

EntityManager em = ThreadLocalContext.get().getEntityManager();

|

EntityManager em = ThreadLocalContext.get().getEntityManager();

|

||||||

StringBuilder sb = new StringBuilder("select distinct d.DOC_ID_C, d.DOC_TITLE_C, d.DOC_DESCRIPTION_C, d.DOC_SUBJECT_C, d.DOC_IDENTIFIER_C, d.DOC_PUBLISHER_C, d.DOC_FORMAT_C, d.DOC_SOURCE_C, d.DOC_TYPE_C, d.DOC_COVERAGE_C, d.DOC_RIGHTS_C, d.DOC_CREATEDATE_D, d.DOC_UPDATEDATE_D, d.DOC_LANGUAGE_C, ");

|

StringBuilder sb = new StringBuilder("select distinct d.DOC_ID_C, d.DOC_TITLE_C, d.DOC_DESCRIPTION_C, d.DOC_SUBJECT_C, d.DOC_IDENTIFIER_C, d.DOC_PUBLISHER_C, d.DOC_FORMAT_C, d.DOC_SOURCE_C, d.DOC_TYPE_C, d.DOC_COVERAGE_C, d.DOC_RIGHTS_C, d.DOC_CREATEDATE_D, d.DOC_UPDATEDATE_D, d.DOC_LANGUAGE_C, d.DOC_IDFILE_C,");

|

||||||

sb.append(" (select count(s.SHA_ID_C) from T_SHARE s, T_ACL ac where ac.ACL_SOURCEID_C = d.DOC_ID_C and ac.ACL_TARGETID_C = s.SHA_ID_C and ac.ACL_DELETEDATE_D is null and s.SHA_DELETEDATE_D is null) shareCount, ");

|

sb.append(" (select count(s.SHA_ID_C) from T_SHARE s, T_ACL ac where ac.ACL_SOURCEID_C = d.DOC_ID_C and ac.ACL_TARGETID_C = s.SHA_ID_C and ac.ACL_DELETEDATE_D is null and s.SHA_DELETEDATE_D is null) shareCount, ");

|

||||||

sb.append(" (select count(f.FIL_ID_C) from T_FILE f where f.FIL_DELETEDATE_D is null and f.FIL_IDDOC_C = d.DOC_ID_C) fileCount, ");

|

sb.append(" (select count(f.FIL_ID_C) from T_FILE f where f.FIL_DELETEDATE_D is null and f.FIL_IDDOC_C = d.DOC_ID_C) fileCount, ");

|

||||||

sb.append(" u.USE_USERNAME_C ");

|

sb.append(" u.USE_USERNAME_C ");

|

||||||

@ -122,6 +121,7 @@ public class DocumentDao {

|

|||||||

documentDto.setCreateTimestamp(((Timestamp) o[i++]).getTime());

|

documentDto.setCreateTimestamp(((Timestamp) o[i++]).getTime());

|

||||||

documentDto.setUpdateTimestamp(((Timestamp) o[i++]).getTime());

|

documentDto.setUpdateTimestamp(((Timestamp) o[i++]).getTime());

|

||||||

documentDto.setLanguage((String) o[i++]);

|

documentDto.setLanguage((String) o[i++]);

|

||||||

|

documentDto.setFileId((String) o[i++]);

|

||||||

documentDto.setShared(((Number) o[i++]).intValue() > 0);

|

documentDto.setShared(((Number) o[i++]).intValue() > 0);

|

||||||

documentDto.setFileCount(((Number) o[i++]).intValue());

|

documentDto.setFileCount(((Number) o[i++]).intValue());

|

||||||

documentDto.setCreator((String) o[i]);

|

documentDto.setCreator((String) o[i]);

|

||||||

@ -138,16 +138,16 @@ public class DocumentDao {

|

|||||||

EntityManager em = ThreadLocalContext.get().getEntityManager();

|

EntityManager em = ThreadLocalContext.get().getEntityManager();

|

||||||

|

|

||||||

// Get the document

|

// Get the document

|

||||||

Query q = em.createQuery("select d from Document d where d.id = :id and d.deleteDate is null");

|

TypedQuery<Document> dq = em.createQuery("select d from Document d where d.id = :id and d.deleteDate is null", Document.class);

|

||||||

q.setParameter("id", id);

|

dq.setParameter("id", id);

|

||||||

Document documentDb = (Document) q.getSingleResult();

|

Document documentDb = dq.getSingleResult();

|

||||||

|

|

||||||

// Delete the document

|

// Delete the document

|

||||||

Date dateNow = new Date();

|

Date dateNow = new Date();

|

||||||

documentDb.setDeleteDate(dateNow);

|

documentDb.setDeleteDate(dateNow);

|

||||||

|

|

||||||

// Delete linked data

|

// Delete linked data

|

||||||

q = em.createQuery("update File f set f.deleteDate = :dateNow where f.documentId = :documentId and f.deleteDate is null");

|

Query q = em.createQuery("update File f set f.deleteDate = :dateNow where f.documentId = :documentId and f.deleteDate is null");

|

||||||

q.setParameter("documentId", id);

|

q.setParameter("documentId", id);

|

||||||

q.setParameter("dateNow", dateNow);

|

q.setParameter("dateNow", dateNow);

|

||||||

q.executeUpdate();

|

q.executeUpdate();

|

||||||

@ -179,10 +179,10 @@ public class DocumentDao {

|

|||||||

*/

|

*/

|

||||||

public Document getById(String id) {

|

public Document getById(String id) {

|

||||||

EntityManager em = ThreadLocalContext.get().getEntityManager();

|

EntityManager em = ThreadLocalContext.get().getEntityManager();

|

||||||

Query q = em.createQuery("select d from Document d where d.id = :id and d.deleteDate is null");

|

TypedQuery<Document> q = em.createQuery("select d from Document d where d.id = :id and d.deleteDate is null", Document.class);

|

||||||

q.setParameter("id", id);

|

q.setParameter("id", id);

|

||||||

try {

|

try {

|

||||||

return (Document) q.getSingleResult();

|

return q.getSingleResult();

|

||||||

} catch (NoResultException e) {

|

} catch (NoResultException e) {

|

||||||

return null;

|

return null;

|

||||||

}

|

}

|

||||||

@ -199,9 +199,9 @@ public class DocumentDao {

|

|||||||

EntityManager em = ThreadLocalContext.get().getEntityManager();

|

EntityManager em = ThreadLocalContext.get().getEntityManager();

|

||||||

|

|

||||||

// Get the document

|

// Get the document

|

||||||

Query q = em.createQuery("select d from Document d where d.id = :id and d.deleteDate is null");

|

TypedQuery<Document> q = em.createQuery("select d from Document d where d.id = :id and d.deleteDate is null", Document.class);

|

||||||

q.setParameter("id", document.getId());

|

q.setParameter("id", document.getId());

|

||||||

Document documentDb = (Document) q.getSingleResult();

|

Document documentDb = q.getSingleResult();

|

||||||

|

|

||||||

// Update the document

|

// Update the document

|

||||||

documentDb.setTitle(document.getTitle());

|

documentDb.setTitle(document.getTitle());

|

||||||

@ -237,7 +237,6 @@ public class DocumentDao {

|

|||||||

query.setParameter("fileId", document.getFileId());

|

query.setParameter("fileId", document.getFileId());

|

||||||

query.setParameter("id", document.getId());

|

query.setParameter("id", document.getId());

|

||||||

query.executeUpdate();

|

query.executeUpdate();

|

||||||

|

|

||||||

}

|

}

|

||||||

|

|

||||||

/**

|

/**

|

||||||

|

|||||||

@ -5,8 +5,8 @@ import com.sismics.docs.core.dao.dto.DocumentMetadataDto;

|

|||||||

import com.sismics.docs.core.model.jpa.DocumentMetadata;

|

import com.sismics.docs.core.model.jpa.DocumentMetadata;

|

||||||

import com.sismics.util.context.ThreadLocalContext;

|

import com.sismics.util.context.ThreadLocalContext;

|

||||||

|

|

||||||

import javax.persistence.EntityManager;

|

import jakarta.persistence.EntityManager;

|

||||||

import javax.persistence.Query;

|

import jakarta.persistence.Query;

|

||||||

import java.util.ArrayList;

|

import java.util.ArrayList;

|

||||||

import java.util.List;

|

import java.util.List;

|

||||||

import java.util.UUID;

|

import java.util.UUID;

|

||||||

|

|||||||

@ -4,12 +4,16 @@ import com.sismics.docs.core.constant.AuditLogType;

|

|||||||

import com.sismics.docs.core.model.jpa.File;

|

import com.sismics.docs.core.model.jpa.File;

|

||||||

import com.sismics.docs.core.util.AuditLogUtil;

|

import com.sismics.docs.core.util.AuditLogUtil;

|

||||||

import com.sismics.util.context.ThreadLocalContext;

|

import com.sismics.util.context.ThreadLocalContext;

|

||||||

|

import jakarta.persistence.EntityManager;

|

||||||

|

import jakarta.persistence.NoResultException;

|

||||||

|

import jakarta.persistence.Query;

|

||||||

|

import jakarta.persistence.TypedQuery;

|

||||||

|

|

||||||

import javax.persistence.EntityManager;

|

import java.util.Collections;

|

||||||

import javax.persistence.NoResultException;

|

|

||||||

import javax.persistence.Query;

|

|

||||||

import java.util.Date;

|

import java.util.Date;

|

||||||

|

import java.util.HashMap;

|

||||||

import java.util.List;

|

import java.util.List;

|

||||||

|

import java.util.Map;

|

||||||

import java.util.UUID;

|

import java.util.UUID;

|

||||||

|

|

||||||

/**

|

/**

|

||||||

@ -47,10 +51,9 @@ public class FileDao {

|

|||||||

* @param limit Limit

|

* @param limit Limit

|

||||||

* @return List of files

|

* @return List of files

|

||||||

*/

|

*/

|

||||||

@SuppressWarnings("unchecked")

|

|

||||||

public List<File> findAll(int offset, int limit) {

|

public List<File> findAll(int offset, int limit) {

|

||||||

EntityManager em = ThreadLocalContext.get().getEntityManager();

|

EntityManager em = ThreadLocalContext.get().getEntityManager();

|

||||||

Query q = em.createQuery("select f from File f where f.deleteDate is null");

|

TypedQuery<File> q = em.createQuery("select f from File f where f.deleteDate is null", File.class);

|

||||||

q.setFirstResult(offset);

|

q.setFirstResult(offset);

|

||||||

q.setMaxResults(limit);

|

q.setMaxResults(limit);

|

||||||

return q.getResultList();

|

return q.getResultList();

|

||||||

@ -62,28 +65,38 @@ public class FileDao {

|

|||||||

* @param userId User ID

|

* @param userId User ID

|

||||||

* @return List of files

|

* @return List of files

|

||||||

*/

|

*/

|

||||||

@SuppressWarnings("unchecked")

|

|

||||||

public List<File> findByUserId(String userId) {

|

public List<File> findByUserId(String userId) {

|

||||||

EntityManager em = ThreadLocalContext.get().getEntityManager();

|

EntityManager em = ThreadLocalContext.get().getEntityManager();

|

||||||

Query q = em.createQuery("select f from File f where f.userId = :userId and f.deleteDate is null");

|

TypedQuery<File> q = em.createQuery("select f from File f where f.userId = :userId and f.deleteDate is null", File.class);

|

||||||

q.setParameter("userId", userId);

|

q.setParameter("userId", userId);

|

||||||

return q.getResultList();

|

return q.getResultList();

|

||||||

}

|

}

|

||||||

|

|

||||||

|

/**

|

||||||

|

* Returns a list of active files.

|

||||||

|

*

|

||||||

|

* @param ids Files IDs

|

||||||

|

* @return List of files

|

||||||

|

*/

|

||||||

|

public List<File> getFiles(List<String> ids) {

|

||||||

|

EntityManager em = ThreadLocalContext.get().getEntityManager();

|

||||||

|

TypedQuery<File> q = em.createQuery("select f from File f where f.id in :ids and f.deleteDate is null", File.class);

|

||||||

|

q.setParameter("ids", ids);

|

||||||

|

return q.getResultList();

|

||||||

|

}

|

||||||

|

|

||||||

/**

|

/**

|

||||||

* Returns an active file.

|

* Returns an active file or null.

|

||||||

*

|

*

|

||||||

* @param id File ID

|

* @param id File ID

|

||||||

* @return Document

|

* @return File

|

||||||

*/

|

*/

|

||||||

public File getFile(String id) {

|

public File getFile(String id) {

|

||||||

EntityManager em = ThreadLocalContext.get().getEntityManager();

|

List<File> files = getFiles(List.of(id));

|

||||||

Query q = em.createQuery("select f from File f where f.id = :id and f.deleteDate is null");

|

if (files.isEmpty()) {

|

||||||

q.setParameter("id", id);

|

|

||||||

try {

|

|

||||||

return (File) q.getSingleResult();

|

|

||||||

} catch (NoResultException e) {

|

|

||||||

return null;

|

return null;

|

||||||

|

} else {

|

||||||

|

return files.get(0);

|

||||||

}

|

}

|

||||||

}

|

}

|

||||||

|

|

||||||
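Review note: the hunk above makes `getFile(String id)` delegate to the new batch lookup `getFiles(List<String> ids)` and return `null` when the list comes back empty. The sketch below shows that delegation pattern in isolation. It is not the project's code: `ActiveFileLookup`, `StoredFile`, and the in-memory map are hypothetical stand-ins for `FileDao`, the `File` entity, and the JPA query, assumed here only so the example runs without a database.

```java
import java.util.ArrayList;
import java.util.Date;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class ActiveFileLookup {
    /** Hypothetical stand-in for the File entity: an ID plus a soft-delete date. */
    static final class StoredFile {
        final String id;
        final Date deleteDate; // null means the file is still active

        StoredFile(String id, Date deleteDate) {
            this.id = id;
            this.deleteDate = deleteDate;
        }
    }

    // Hypothetical in-memory replacement for the database table.
    private final Map<String, StoredFile> store = new LinkedHashMap<>();

    void add(StoredFile file) {
        store.put(file.id, file);
    }

    /** Batch lookup: keeps only requested files that exist and are not soft-deleted. */
    List<StoredFile> getFiles(List<String> ids) {
        List<StoredFile> result = new ArrayList<>();
        for (String id : ids) {
            StoredFile f = store.get(id);
            if (f != null && f.deleteDate == null) {
                result.add(f);
            }
        }
        return result;
    }

    /** Single lookup: delegates to the batch lookup, returns null when nothing matches. */
    StoredFile getFile(String id) {
        List<StoredFile> files = getFiles(List.of(id));
        return files.isEmpty() ? null : files.get(0);
    }
}
```

One point worth checking in review: `List.of` rejects `null` elements, so in this pattern a `null` id raises a `NullPointerException` instead of producing an empty result.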

@ -92,15 +105,15 @@ public class FileDao {

|

|||||||

*

|

*

|

||||||

* @param id File ID

|

* @param id File ID

|

||||||

* @param userId User ID

|

* @param userId User ID

|

||||||

* @return Document

|

* @return File

|

||||||

*/

|

*/

|

||||||

public File getFile(String id, String userId) {

|

public File getFile(String id, String userId) {

|

||||||

EntityManager em = ThreadLocalContext.get().getEntityManager();

|

EntityManager em = ThreadLocalContext.get().getEntityManager();

|

||||||

Query q = em.createQuery("select f from File f where f.id = :id and f.userId = :userId and f.deleteDate is null");

|

TypedQuery<File> q = em.createQuery("select f from File f where f.id = :id and f.userId = :userId and f.deleteDate is null", File.class);

|

||||||

q.setParameter("id", id);

|

q.setParameter("id", id);

|

||||||

q.setParameter("userId", userId);

|

q.setParameter("userId", userId);

|

||||||

try {

|

try {

|

||||||

return (File) q.getSingleResult();

|

return q.getSingleResult();

|

||||||

} catch (NoResultException e) {

|

} catch (NoResultException e) {

|

||||||

return null;

|

return null;

|

||||||

}

|

}

|

||||||

@ -116,9 +129,9 @@ public class FileDao {

|

|||||||

EntityManager em = ThreadLocalContext.get().getEntityManager();

|

EntityManager em = ThreadLocalContext.get().getEntityManager();

|

||||||

|

|

||||||

// Get the file

|

// Get the file

|

||||||

Query q = em.createQuery("select f from File f where f.id = :id and f.deleteDate is null");

|

TypedQuery<File> q = em.createQuery("select f from File f where f.id = :id and f.deleteDate is null", File.class);

|

||||||

q.setParameter("id", id);

|

q.setParameter("id", id);

|

||||||

File fileDb = (File) q.getSingleResult();

|

File fileDb = q.getSingleResult();

|

||||||

|

|

||||||

// Delete the file

|

// Delete the file

|

||||||

Date dateNow = new Date();

|

Date dateNow = new Date();

|

||||||

@ -138,9 +151,9 @@ public class FileDao {

|

|||||||

EntityManager em = ThreadLocalContext.get().getEntityManager();

|

EntityManager em = ThreadLocalContext.get().getEntityManager();

|

||||||

|

|

||||||

// Get the file

|

// Get the file

|

||||||

Query q = em.createQuery("select f from File f where f.id = :id and f.deleteDate is null");

|

TypedQuery<File> q = em.createQuery("select f from File f where f.id = :id and f.deleteDate is null", File.class);

|

||||||

q.setParameter("id", file.getId());

|

q.setParameter("id", file.getId());

|

||||||

File fileDb = (File) q.getSingleResult();

|

File fileDb = q.getSingleResult();

|

||||||

|

|

||||||

// Update the file

|

// Update the file

|

||||||

fileDb.setDocumentId(file.getDocumentId());

|